Green on green camera spraying - a game changer on our doorstep?

Author: Guillaume Jourdain, Bilberry | Date: 26 Feb 2019

Take home messages

- Green on green camera technologies are now used on farms. This will lead to important financial benefits for growers but will also have impacts on farm management.

- Growers need to understand the benefits but also the limitations of these new technologies. This is the only way they will bring real benefits.

Overview of vision systems for spraying and identifying weeds

In this paper, we focus only on systems embedded on sprayers or spraying equipment, so we will not discuss drones. Drones are a very interesting technology, but their convenience of use is currently limited by regulation, the need for a pilot, the need for good weather (no wind or rain), and ground resolution that is often lower than with embedded sensors.

Systems on the market and their limitations

Two optical camera systems to spray weeds have been on the market for several years, WEEDit and WeedSeeker. These systems are now commonly used in Australia for green on brown applications. Previous analysis of these can be found in a GRDC Update paper:

GRDC - Sensors offer potential for in-crop decisions

A factsheet from the Australian Society of Precision Agriculture, SPAA

Summary of key facts for these sensors

- Active sensors – chlorophyll sensing

- High number of sensors (one per meter for WEEDit and one per nozzle for WeedSeeker)

- Significant reduction in chemical usage

- High cost ($4000 / meter)

- Day and night usage

- Limited speed (15 km/h for WeedSeeker and 20 km/h for WEEDit)

- Boom stability is important, so wheels are usually added on the booms

- Calibration is needed on the WeedSeeker, while the WEEDit has an autocalibration mode

- Neither technology can work in green on green applications.

Systems under development

Many organisations, including start-ups, large corporations and universities, are now developing systems with green on green capability. The technology used is similar: artificial intelligence with cameras (sometimes RGB/colour cameras, sometimes hyperspectral cameras).

Examples of companies working on green on green technologies:

- Bilberry, a French AI based start-up that specialises in cameras for recognising weeds (more below)

- Blue River Technology, acquired by John Deere in September 2017 for more than $300M, developing a See and Spray technology - a spraying tool with smart cameras, trailed by a tractor, that can spray weeds very accurately at about 10 km/h

- Ecorobotix, a Swiss based start-up developing an autonomous solar robot that kills weeds. They are also developing the camera technology

- AgroIntelli, a Danish company developing an autonomous robot to replace tractors, that will also include spraying capacity. They are also developing the camera technology

- Bosch, the German company that is more and more involved in agriculture, launched a project called Bonirob a couple of years ago: a robot that includes smart cameras to kill weeds in a more efficient way

Figure 1. From top left to bottom right: Agrointelli robot, Blue River Technology tool, Bosch robot and Ecorobotix robot.

Artificial intelligence to detect weeds

Past research

Recognising weeds within crops has interested companies and researchers for a very long time; the first patents on the topic date from the 1990s. The main approach was to differentiate weeds from crops by colour and shape. Mathematical formulas defined a range of colours and a range of shapes for each weed (we can call these conventional algorithms).

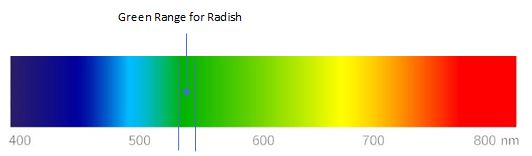

To give a very simplistic example, one could define, through experimentation, that radish colour would be within a specific green range, as shown on the graph below.

Figure 2. Simplistic example of conventional algorithms mechanism

This approach gave good results in the lab, where conditions are excellent and easily replicated: the light is constant and homogeneous, there is no wind, all crops and weeds are from the same variety and are not stressed, etc. Because these conditions are so controlled, two types of weeds or crops can often be differentiated by colour and shape.

However, paddock conditions are completely different. The sun can be high or low, behind you or in your eyes; there can be clouds; there can be shadows from the tractor/sprayer cabin or from the spraying boom; crops can be wet in the morning (which creates sun reflections); and soil colours vary.

It became clear that conventional algorithms could not work in field conditions.
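The weakness of a fixed colour range can be illustrated with a short Python sketch. It is only an illustration of the idea described above, not any vendor's algorithm: the green range, the pixel values and the lighting factors are all hypothetical numbers chosen for the example.

```python
# Hypothetical green-channel range for "radish"; the values are illustrative only.
RADISH_GREEN_RANGE = (120, 180)

def looks_like_radish(rgb, green_range=RADISH_GREEN_RANGE):
    """Conventional rule: green dominates and falls inside a fixed range."""
    r, g, b = rgb
    low, high = green_range
    return g > r and g > b and low <= g <= high

def apply_lighting(rgb, factor):
    """Scale brightness to mimic shade (<1) or strong sun (>1), clipped to 0-255."""
    return tuple(min(255, max(0, round(c * factor))) for c in rgb)

lab_pixel = (60, 150, 50)                      # same leaf under lab lighting
shaded_pixel = apply_lighting(lab_pixel, 0.5)  # e.g. in the shadow of the boom
sunlit_pixel = apply_lighting(lab_pixel, 1.4)  # e.g. in strong direct sun

print(looks_like_radish(lab_pixel))     # True
print(looks_like_radish(shaded_pixel))  # False
print(looks_like_radish(sunlit_pixel))  # False
```

The same leaf is accepted under lab lighting and rejected in shade or strong sun, which is the kind of failure that fixed ranges run into in the paddock.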

Artificial intelligence as a game changer

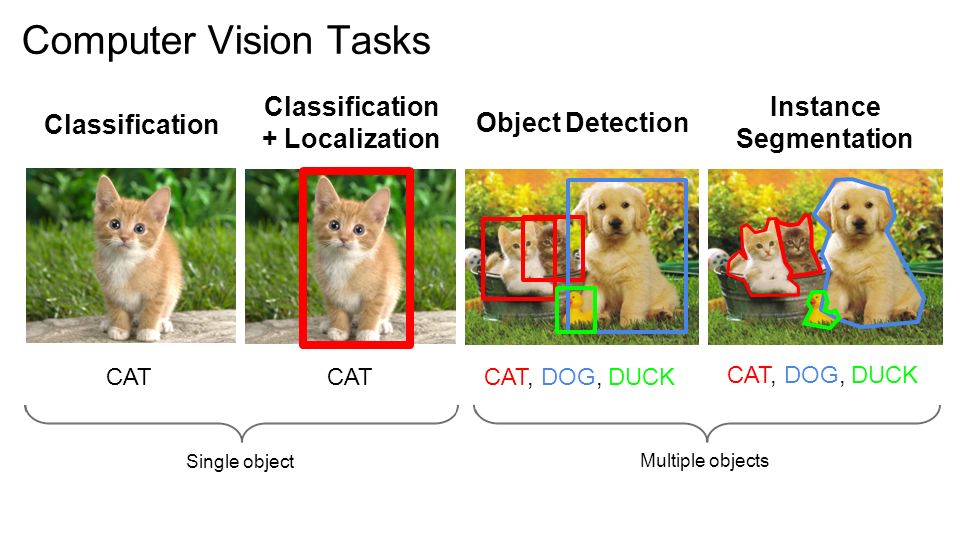

Artificial intelligence, and especially deep learning, is another way of working on images to recognise different objects. It is now the most widely used technology for computer vision on complex images (recognising weeds within crops, or on bare soil, is definitely a complex task). Complex images can be defined as images that show high variability within the same category of object (an object being a cat, a dog, a human, or a weed).

Deep learning is part of the family of machine learning and is inspired by the way the human brain works (deep learning often uses deep neural networks architecture). The learning part can be either supervised or unsupervised. We will discuss supervised learning and how to apply it to weed recognition more in depth below.

Below is an example of different kinds of deep learning architectures applied to computer vision.

Figure 3. Different deep learning architectures

Deep learning is now possible on embedded systems

Research on deep learning also started in the 1990s, but it only became widely used in the 2010s. Three key components are needed to develop deep learning applications, and all three have only been available for a few years. These are:

- Plenty of data

- High computing power

- Powerful algorithms

Data generation has grown at an incredible speed since the early 2000s and the fast development of the internet. We now have access to data about almost everything, in very large quantities.

Computing power is needed twice for deep learning: first during algorithm training and then during “inference”, which is when the trained algorithm is actually used. Deep learning runs on GPUs (Graphics Processing Units), and these GPUs became really powerful with the development of autonomous vehicles.

Since more powerful processing units were available, more powerful algorithms were also developed by engineers.

The three conditions above are now met, so deep learning is applicable to many situations and is especially relevant for farming.

Supervised process for deep learning

Here is the classical process to develop a deep learning algorithm with the supervised method:

- Define algorithm usage and objectives

  - Example with weed recognition (WR): Recognise flowering wild radish in wheat with > 90% accuracy

- Gather data

  - WR: Take pictures in the fields of flowering wild radish in wheat

- Sort and label data

  - WR: On each picture, indicate what is wheat, what is wild radish etc.

  - WR: Also separate all images into 2 sets, a training set and a testing set. The training set is only used for training, and the testing set is only used for testing (an image cannot be in both sets)

- Train algorithm

  - WR: Show the training set (thousands of times) to the algorithm so that it can learn patterns

- Test algorithm

  - WR: Show the test set (one time) to the algorithm to compare the results of the algorithm with reality

  - WR: Once happy with the results of the algorithm, go into the paddock to test (paddock testing is the most crucial part of the process)

  - Note: It NEVER works first time …

- Repeat until you reach your objectives
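The splitting and testing steps above can be sketched in a few lines of Python. This is a minimal illustration, not Bilberry's pipeline: the image ids, the 80/20 split, the fixed seed and the toy labels and predictions are all assumptions made for the example.

```python
import random

def split_dataset(image_ids, test_fraction=0.2, seed=0):
    """Shuffle labelled image ids and split them into disjoint train/test sets."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)
    n_test = max(1, int(len(ids) * test_fraction))
    return ids[n_test:], ids[:n_test]

def accuracy(predictions, labels):
    """Share of test items where the algorithm agrees with the human label."""
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)

# Hypothetical image ids standing in for labelled paddock pictures.
image_ids = [f"img_{i:04d}" for i in range(1000)]
train_ids, test_ids = split_dataset(image_ids)

# An image must never appear in both sets.
assert set(train_ids).isdisjoint(test_ids)

# Toy test results: human labels versus what a trained algorithm predicted.
labels      = ["radish", "wheat", "radish", "wheat", "radish"]
predictions = ["radish", "wheat", "wheat",  "wheat", "radish"]

OBJECTIVE = 0.90  # the "> 90% accuracy" target from the first step
score = accuracy(predictions, labels)
print(f"accuracy = {score:.0%}, objective met: {score > OBJECTIVE}")
```

In this toy case the score is below the objective, so the loop would go back to gathering and labelling more data before training again.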

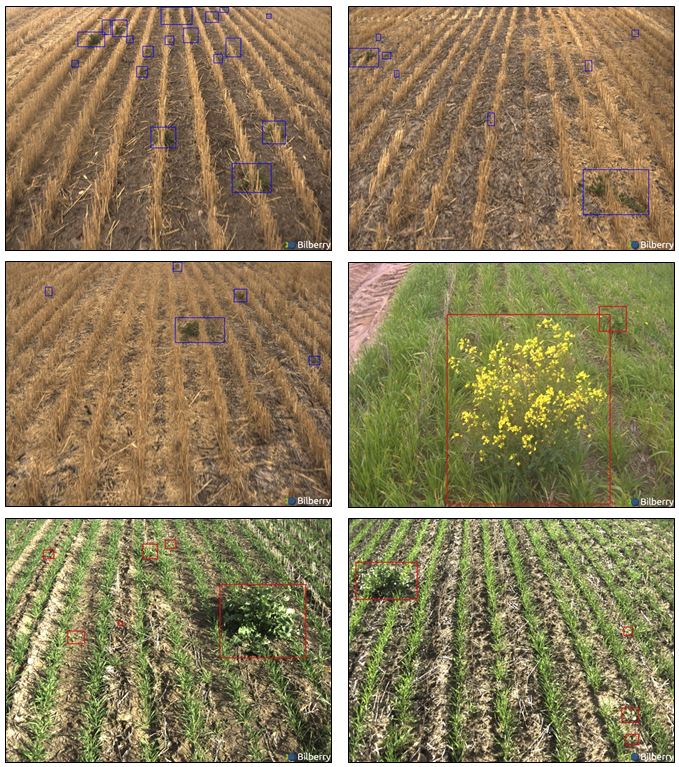

Two of the most important steps are data gathering and paddock testing (these 2 steps happen in the field). What is especially complex and important about data gathering is to be able to capture the diversity of situations. Below is an example of different situations, where the aim is to spray any live weed on bare soil.

Figure 4. Different situations for summer spraying in Australia (sandy soils, high stubble, no stubble)

Research and results at Bilberry

Bilberry presentation

Bilberry was founded in January 2016 by three French engineers, with the idea of using artificial intelligence to help solve problems in agriculture. Bilberry's main product is now cameras embedded on sprayers. They scan the paddocks to recognise weeds and then control the spraying in real time, so that only the weeds are sprayed rather than the whole paddock. Bilberry also develops cameras that recognise weeds on rail tracks. The technology is similar, with just a higher speed (60 km/h) and day and night applications.

The biggest focus for developing this product is now Australia, with several sprayers already equipped with Bilberry cameras. One reason for this focus is the huge interest among Australian growers and agronomists in green on green spot spraying.

On the booms of the sprayer, there is one camera every 3 meters and then computing modules (to process the data) and switches (to distribute power and data to each camera). In the cabin, there is one screen to control the system.

Figure 5. Bilberry cameras on an Agrifac 48 metre self-propelled sprayer
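To give a sense of scale, the camera spacing above can be turned into a rough sensor count for a 48 metre boom. This is a back-of-the-envelope sketch: rounding up is an assumption, and the actual mounting layout on a given sprayer may differ.

```python
import math

def sensors_needed(boom_width_m, spacing_m):
    """Number of sensors if one is mounted per `spacing_m` of boom (rounded up)."""
    return math.ceil(boom_width_m / spacing_m)

print(sensors_needed(48, 3))  # 16 cameras at one camera every 3 metres
print(sensors_needed(48, 1))  # 48 sensors at one sensor per metre
```

The second line uses the one-sensor-per-metre spacing quoted earlier for WEEDit, purely for comparison.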

Results achieved until now

Three algorithms are now validated and usable directly by growers in the field (two are more focused on Australian growers):

- Weeds on bare soil detection (using AI, but same application as WEEDit or WeedSeeker)

- Rumex (dock weed) in grasslands

- Wild radish in wheat, especially when flowering (link to a video of wild radish spraying; watch in HQ to see the sprays better)

Large chemical savings are made with the cameras, but they are also a very interesting tool for fighting resistant weeds, potentially enabling the use of products that cannot currently be used in crop because of either cost or crop impact.

Below are some pictures taken by our cameras and what is seen by the algorithm.

Main usage conditions of the Bilberry camera

The cameras are used at up to 25 km/h speed and can be used on wide booms (widest boom used is 49 metres, but could be more if needed). This means there is a very high capacity with the sprayer equipped with cameras.

Theoretical camera capacity = 25 km/h × 48 m = 25,000 m/h × 48 m = 1,200,000 m²/h = 120 ha/hour

In real spraying conditions, capacity is of course lower, since the speed is not always 25 km/h and the sprayers need to be refilled.
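The capacity figure above can be reproduced for other speeds and boom widths with a small helper. The function name is hypothetical, and the calculation deliberately ignores refills, turns and slower passes.

```python
def theoretical_capacity_ha_per_hour(speed_kmh, boom_width_m):
    """Area covered per hour, ignoring refills, turns and slower passes."""
    metres_per_hour = speed_kmh * 1000
    square_metres_per_hour = metres_per_hour * boom_width_m
    return square_metres_per_hour / 10_000  # 1 ha = 10,000 m²

print(theoretical_capacity_ha_per_hour(25, 48))  # 120.0
```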

Summer spraying in Australia (New South Wales example)

One Agrifac 48 metre boom is equipped with cameras on a farm in New South Wales. Before the grower and his team started using the cameras, a comparative test was made against current camera sprayer technology. It was then decided to use Bilberry cameras as much as possible on the farm.

Figure 7. Test field after spraying with dye

The cameras have therefore been used since the beginning of the 2018-2019 summer spraying season, directly by the grower and his team. Over a three-week period, the most important figures are:

Table 1. Figures from the 2018-2019 summer spraying season

| Total area sprayed | Area per day | Area per hour | Chemical savings |
|---|---|---|---|
| 6199 ha | 413 ha | 75 ha | 93.5% |

The carrier volume used was generally set at 150 litres / ha.

It is very important to note that the chemical savings are directly linked to the extent of weed infestation in the paddocks. A paddock with high weed infestation will get little savings whereas a paddock with low weed infestation will get high savings.
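A few derived figures help put Table 1 in context. The sketch below only rearranges the numbers already given. Reading the chemical savings as roughly the share of the paddock left unsprayed is an assumption made for illustration.

```python
total_area_ha = 6199
area_per_day_ha = 413
area_per_hour_ha = 75
chemical_savings = 0.935
theoretical_ha_per_hour = 120  # 25 km/h x 48 m, from the capacity formula

spray_days = total_area_ha / area_per_day_ha
hours_per_day = area_per_day_ha / area_per_hour_ha
share_of_theoretical = area_per_hour_ha / theoretical_ha_per_hour
# Assumption: savings roughly equal the share of the area that was not sprayed.
sprayed_share = 1 - chemical_savings

print(f"spray days: {spray_days:.1f}")                                # 15.0
print(f"spraying hours per day: {hours_per_day:.1f}")                 # 5.5
print(f"share of theoretical capacity: {share_of_theoretical:.1%}")   # 62.5%
print(f"approximate share of area sprayed: {sprayed_share:.1%}")      # 6.5%
```

On a paddock with heavier weed infestation, the sprayed share rises and the savings fall accordingly.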

Spraying dock weeds in grasslands (Netherlands)

In the Netherlands, a 36 metre Agrifac boom is equipped with Bilberry cameras and uses an algorithm to spray dock weeds on grasslands. The same testing process as described earlier was used to ensure the algorithm was working properly.

Once the grower validated that the algorithm was working, it was used during the whole spraying season. About 500 ha were sprayed during the season, and the average chemical savings were above 90%. The cost of the chemical is about €50/ha for this specific application, which means about €45/ha in chemical savings with the cameras.
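The per-hectare saving can be scaled to the whole season with a short calculation. This is a rough sketch that assumes a flat 90% saving (the source only says "above 90%") and the same €50/ha product across all 500 ha, so the result is an approximate lower bound.

```python
def chemical_saving_per_ha(cost_per_ha, saving_rate):
    """Money saved per hectare for a given chemical cost and saving rate."""
    return cost_per_ha * saving_rate

saving_per_ha = chemical_saving_per_ha(50, 0.90)  # euros per hectare
season_saving = saving_per_ha * 500               # about 500 ha sprayed

print(f"saving per ha: {saving_per_ha:.0f} EUR")         # 45 EUR
print(f"saving over the season: {season_saving:.0f} EUR")  # 22500 EUR
```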

Here is a link to see the machine spraying dock weed (to see the sprays happening, play the video in high quality).

Future machine capabilities

Obviously, the biggest focus is to develop new weeding applications (which means new algorithms) to be able to use the cameras more often.

Other important development focuses we have right now include:

- Working at night (already working on rail tracks, but not on sprayers)

- Working at 30 km/h

- Delivering a weed map after a spray run (already working on rail tracks, but not on sprayers), to compare with the application map

- New weed applications

In the future, we believe that every time the sprayer goes in the field, the cameras should be able to bring value to the grower. Sometimes it would mean direct application (for instance for weed spraying) and other times it would mean building maps (maps to give growth stage throughout the paddock or disease status or anything that could help growers and agronomists do their job).

We will also look into algorithms for modulating nitrogen and fungicide applications.

On a different note, spraying with cameras will generate a lot of data. Because each record is saved with its GPS coordinates, the data will be very precise and will give agronomists and farmers new tools to improve their overall farm management strategy.

Concrete implications for growers

Cameras that detect green on green bring multiple new possibilities for growers. The most important and immediate consequences are new options for fighting resistant weeds, impressive chemical savings and a reduced herbicide environmental load. The potential to reduce the area of crop sprayed with in-crop selective herbicides may also help by reducing the stress on stress interactions that are sometimes associated with in-crop herbicide use.

It is also very important to note that, as with any new technology, it will only work well if growers get to know the technology: how it works, its limitations and its possibilities. The first and most important thing for growers will be to pay close attention to the results of each spraying: first, were all weeds killed, and second, how much was saved? The cameras might work perfectly on 90% of a grower's paddocks and, for some reason, not perform as well on the other 10%. This can definitely be corrected within the algorithms (see above how to train an algorithm), but to correct an algorithm, the designer of the cameras must be made aware that there is an issue.

Acknowledgements

Presenting this green on green technology has been possible thanks to the interest and passion of the GRDC Update coordinators and the support of the GRDC to bring me to Australia to present at the Updates. I would also like to express my thanks to the first growers that believed in Bilberry in Australia.

Contact details

Guillaume Jourdain

Bilberry

44 avenue Raspail – 94250 – Gentilly - FRANCE

Email: guillaume@bilberry.io
