Future farming: Machine vision for nitrogen assessment in grain crops

Authors: Alison McCarthy (University of Southern Queensland) and Craig Baillie (University of Southern Queensland) | Date: 10 Sep 2020

Take home message

- A pilot simulation and field study demonstrated potential for machine vision to estimate in-season soil and leaf nitrogen status using cameras and image analysis for real-time sensing and control

- Modelled soil and leaf nitrogen were estimated with >80% accuracy using crop features detectable by machine vision, combined with known underlying soil variability

- Further trials will evaluate and refine the machine vision system at the Future Farm core sites.

Aim

The aim of this research was to identify how machine vision could complement, and be incorporated with, soil sampling and other sensing technologies in a more automated nitrogen sensing system.

Introduction

Nitrogen management is vital to maximise agricultural crop yields. Nitrogen requirements can vary spatially across fields because of differences in soil properties and crop conditions. Nitrogen status is typically assessed using soil testing, grain protein levels and paddock history, and nitrogen is applied prior to sowing. This pre-season nitrogen application sets approximately 80% of the average yield outcome. In-season top-up nitrogen, typically applied in high rainfall zones and irrigated crops, contributes the remainder of the average yield outcome. In some southern grains systems, in-season nitrogen status can be assessed agronomically from tiller counts, stand appearance and plant structure (Miller and Shober 2018; Voight 2019). However, in-field sampling may not be practical for timely in-season nitrogen management decisions, and collecting tiller and density counts is typically labour-intensive.

Automated systems based on reflectance sensing have been developed to increase the spatial and temporal resolution of assessments. Reflectance sensors (e.g. Crop Circle™) that measure vegetation indices from spectral reflectance, and cameras that assess colour, have been used for crop vigour assessments to estimate nitrogen content (Li et al. 2010; Porter 2010; Wang et al. 2014; dos Santos et al. 2016). The measured reflectance of the crop can be compared with that of N-minus and N-rich strips to estimate nitrogen content using linear regression and machine learning. For example, support vector machines, a type of machine learning, have been used to quantify nitrogen status from hyperspectral data (Chlingaryan et al. 2018). Support vector machines are used for classification and regression analysis and are particularly suited to high-dimensional data because they reduce overfitting. However, reflectance sensing (e.g. NDVI) has inconsistent correlations with nitrogen status across different growth stages and seasons (Poole and White 2008; Poole and Craig 2010).

Simulation modelling with the APSIM crop model can also be used to automate nitrogen status estimation (Lawes et al. 2019). This involves linking available online weather and soil information and satellite imagery with iterative simulations to estimate daily soil and leaf nitrogen requirements. This approach requires a high level of computing capacity and skill, which is not currently feasible in all regions and commercial cropping situations.

An alternative approach uses existing machine vision systems to compare agronomic crop features in N-minus and N-rich strip trials, providing a rapid, on-the-go machine vision nitrogen sensor. Machine vision systems have been developed for tiller counting by extracting individual leaves using colour thresholding and line detection (Boyle et al. 2015; Wu et al. 2019), and for plant density detection using colour thresholding and machine learning (Jin et al. 2017; Liu et al. 2017). Deep learning machine vision algorithms have also been used in agricultural image segmentation to detect crop flowering and fruiting structures in orchards (Chen et al. 2018; Kamilaris and Prenafeta-Boldú 2018; Koirala et al. 2020). Muñoz-Huerta et al. (2013) identified that more research is required on using machine vision to determine crop nitrogen status, particularly to reduce the dependence of machine vision system performance on sunlight. Fieldwork and simulation analysis have therefore been conducted to identify how machine vision could complement, and be incorporated with, other sensing technologies in an automated nitrogen sensing system.

Method

Field site and data collection

Fieldwork was conducted to collect a replicated machine vision dataset from different cameras and plots, and APSIM simulations were conducted to estimate soil and leaf nitrogen status throughout the season for comparison. Barley was planted over a 0.4 ha area in USQ's agricultural plot on 9 August 2018 and harvested on 10 December 2018. Nitrogen was applied uniformly over the crop at planting, and irrigation was applied on 9 August (30 mm), 6 September (15 mm) and 29 September (30 mm). Soil moisture, plant height, canopy width and tiller counts were collected weekly between August and December 2018 at nine locations arranged in a grid across the barley trial. Weekly soil nitrate-N, ammonium-N and leaf nitrogen were modelled using APSIM on the days of the plant measurements. APSIM was parameterised using the management information, soil characterisation samples (Hussein 2018), an in-field automatic weather station, and soil nitrate-N and ammonium-N samples collected at harvest.

The machine vision systems compared were: (i) in-field fixed cameras capturing oblique images every 3 hours; (ii) UAVs capturing oblique images in the visible waveband weekly; and (iii) a multispectral Parrot Sequoia camera capturing top-view images weekly. The multispectral imagery was used to collect spectral reflectance and to estimate NDVI (normalized difference vegetation index) and NDRE (normalized difference red edge index).
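Both indices use the standard normalised-difference form: NDVI = (NIR - Red) / (NIR + Red) and NDRE = (NIR - RedEdge) / (NIR + RedEdge). The minimal Python sketch below assumes per-pixel reflectance values for the NIR, red and red-edge bands (all captured by the Sequoia) have already been extracted for a plot; the function names are illustrative and are not part of any camera or processing-software API.

```python
def normalised_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when both bands are zero."""
    total = a + b
    if total == 0:
        return 0.0
    return (a - b) / total


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index from NIR and red reflectance."""
    return normalised_difference(nir, red)


def ndre(nir: float, red_edge: float) -> float:
    """Normalized difference red edge index from NIR and red-edge reflectance."""
    return normalised_difference(nir, red_edge)


def plot_mean(index_fn, band_pairs):
    """Average an index over (band_a, band_b) reflectance pairs for one plot."""
    values = [index_fn(a, b) for a, b in band_pairs]
    return sum(values) / len(values)


# Hypothetical (NIR, red) reflectance values for two pixels in one plot
nir_red = [(0.50, 0.10), (0.40, 0.08)]
print(round(plot_mean(ndvi, nir_red), 3))  # prints 0.667
```

Averaging the index per pixel (rather than averaging bands first) is one reasonable choice; the two give slightly different results when pixel brightness varies.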

Comparing performance of nitrogen status algorithms

A literature review identified algorithms with potential to automatically determine nitrogen requirement from plant measurements in commercial fields. These algorithms were compared using different data inputs to identify which measurements to use: crop features (e.g. crop height, width and tiller counts), spectral reflectance (NDVI, NDRE and greenness) and soil water status (soil water content and drained upper limit). The nitrogen status algorithms evaluated are described below:

- Linear regression algorithm between each individual sensed measurement and N status.

- Linear scaling algorithm: a regression between sensed measurements in the N-minus and N-rich plots of each management zone and sensed measurements in other zones with similar properties (e.g. soil water status, drained upper limit, sowing density), used to estimate nitrogen status. The linear scaling algorithm extends the linear regression approach, which compared spectral reflectance, soil water status and crop features across all plots and did not consider underlying variability that may have caused errors in the nitrogen estimation. In contrast, the linear scaling algorithm estimates nitrogen status while accounting for underlying variability in soil and crop properties. It does this by comparing the spectral reflectance, soil water status or crop features of the crop with those of the N-minus and N-rich plots that have the most similar measured soil water, estimated drained upper limit and measured sowing density.

- Machine learning algorithms linking single or multiple data streams, using the data inputs most influential for nitrogen. The influence of each data type was compared using a random forest classifier, and eight types of machine learning model, trained on the collected data, were compared in Spyder®: logistic regression, support vector machines, random forest classifier, extra trees classifier, linear discriminant analysis, neural network, decision tree classifier and naïve Bayes. The data were split into training and testing sets, each containing 50% of the data. The parameters of each machine learning algorithm were optimised before implementation. The algorithms were then implemented with different input data combinations based on the feature importance results. Four input data combinations were evaluated: spectral reflectance alone, spectral reflectance with soil water status, crop features, and all data.
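The linear scaling step can be sketched as follows. This is a hypothetical illustration, not the authors' implementation: it assumes the most similar reference pair is chosen by Euclidean distance on standardised underlying-variability covariates (e.g. soil water, drained upper limit, sowing density), and that nitrogen status is interpolated linearly between the N-minus and N-rich values. `ReferencePlot` and `linear_scaling_estimate` are names introduced here for illustration.

```python
from dataclasses import dataclass
from math import dist


@dataclass
class ReferencePlot:
    """Hypothetical N-minus/N-rich reference pair for one management zone."""
    covariates: tuple      # underlying variability, standardised, e.g. (soil water, DUL)
    minus_signal: float    # sensed value (e.g. a crop feature) in the N-minus plot
    rich_signal: float     # sensed value in the N-rich plot
    minus_status: float    # nitrogen status associated with the N-minus plot
    rich_status: float     # nitrogen status associated with the N-rich plot


def nearest_reference(refs, covariates):
    """Pick the reference pair whose underlying variability is closest (Euclidean)."""
    return min(refs, key=lambda ref: dist(ref.covariates, covariates))


def linear_scaling_estimate(refs, covariates, signal):
    """Scale a sensed value between the matched N-minus and N-rich plots."""
    ref = nearest_reference(refs, covariates)
    span = ref.rich_signal - ref.minus_signal
    if span == 0:
        raise ValueError("N-minus and N-rich signals are equal; cannot scale")
    fraction = (signal - ref.minus_signal) / span  # 0 at N-minus, 1 at N-rich
    return ref.minus_status + fraction * (ref.rich_status - ref.minus_status)


refs = [
    ReferencePlot((0.0, 0.0), 10.0, 20.0, 1.0, 3.0),
    ReferencePlot((1.0, 1.0), 30.0, 50.0, 2.0, 4.0),
]
print(linear_scaling_estimate(refs, (0.1, 0.2), 15.0))  # prints 2.0
```

Sensed values outside the N-minus to N-rich range give a fraction outside 0 to 1, so the estimate is extrapolated; clipping it would be a further design choice.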

The linear regression algorithms and machine learning models were developed from datasets captured in Zadoks growth stages GS25-GS30 and compared with the raw measured data from the field study. The algorithms were evaluated with different manually measured crop features to identify which inputs to target in a machine vision system.
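The machine learning comparison (50% training, 50% testing, several input data combinations) can be organised as a small harness. This is a sketch under stated assumptions: nitrogen status is assumed to have been binned into class labels (the compared models are classifiers), each row is a dict keyed by hypothetical variable names, and any model exposing scikit-learn-style `fit`/`predict` methods can be plugged in. A trivial majority-class baseline is included so the example runs with the standard library alone.

```python
import random


def split_half(rows, labels, seed=0):
    """Shuffle row indices and split them 50/50 into training and testing sets."""
    idx = list(range(len(rows)))
    random.Random(seed).shuffle(idx)
    half = len(idx) // 2
    train, test = idx[:half], idx[half:]
    return ([rows[i] for i in train], [labels[i] for i in train],
            [rows[i] for i in test], [labels[i] for i in test])


def select_columns(rows, columns):
    """Keep only the variables belonging to one input data combination."""
    return [[row[c] for c in columns] for row in rows]


def compare_models(models, rows, labels, combinations, seed=0):
    """Return {(combination, model): test accuracy in %} using one shared 50/50 split."""
    x_train, y_train, x_test, y_test = split_half(rows, labels, seed)
    results = {}
    for combo, columns in combinations.items():
        xtr, xte = select_columns(x_train, columns), select_columns(x_test, columns)
        for name, make_model in models.items():
            model = make_model()
            model.fit(xtr, y_train)
            predictions = model.predict(xte)
            correct = sum(p == t for p, t in zip(predictions, y_test))
            results[(combo, name)] = 100.0 * correct / len(y_test)
    return results


class MajorityClass:
    """Baseline with a scikit-learn-style fit/predict interface."""

    def fit(self, X, y):
        self.label_ = max(set(y), key=y.count)
        return self

    def predict(self, X):
        return [self.label_ for _ in X]


# Hypothetical measurements and N-status classes
rows = [{"ndvi": 0.6 + 0.01 * i, "soil_water": 20 + i, "height": 30 + i} for i in range(10)]
labels = ["adequate"] * 10
combinations = {"Spectral reflectance": ["ndvi"], "All": ["ndvi", "soil_water", "height"]}
print(compare_models({"Majority": MajorityClass}, rows, labels, combinations))
```

With scikit-learn installed, estimator classes such as `sklearn.svm.SVC` or `sklearn.ensemble.RandomForestClassifier` could be passed as factories in `models`. Using one shared split for every model keeps the comparison fair; the parameter optimisation step described above is not shown.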

Evaluating robustness of machine vision algorithms to lighting

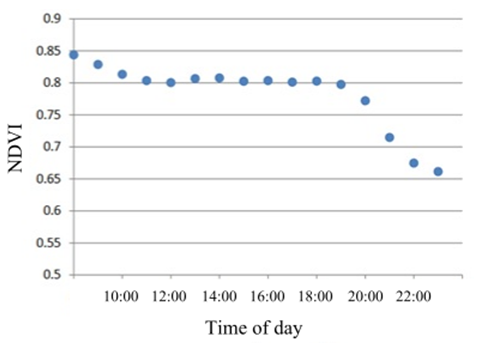

Machine vision systems (e.g. optical sensors, cameras) can be affected by the time of day, which can influence the nitrogen status assessment. Figure 1 shows that NDVI can vary by 6.3% between 8am and 11am and by 1.3% between 11am and 7pm. The performance of simple colour threshold algorithms for measuring canopy cover and greenness was compared using images from in-field cameras taken at different times of day: early morning (5-8am), morning (8-11am), midday (11am-2pm), afternoon (2-5pm) and late afternoon (5-8pm). This was intended to identify the optimal times of day for data collection.

Figure 1. Relationship between GreenSeeker® NDVI sensor readings and time of day (adapted from Porter 2010).
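The colour threshold analysis can be sketched as below. The specific choices are assumptions for illustration, not the authors' algorithm: pixels are classified as canopy using the excess green index (ExG = 2g - r - b on chromatic coordinates) with a hypothetical threshold of 0.05, greenness is taken as the mean green chromatic coordinate of canopy pixels, and the time-of-day error is the mean absolute percentage difference from the plot's daily mean. The time windows match those listed above.

```python
TIME_WINDOWS = {  # hours on a 24 h clock
    "early morning": (5, 8),
    "morning": (8, 11),
    "midday": (11, 14),
    "afternoon": (14, 17),
    "late afternoon": (17, 20),
}


def chromatic(pixel):
    """Convert an (R, G, B) pixel to chromatic coordinates (r, g, b) summing to 1."""
    red, green, blue = pixel
    total = red + green + blue
    if total == 0:
        return 0.0, 0.0, 0.0
    return red / total, green / total, blue / total


def cover_and_greenness(pixels, threshold=0.05):
    """Canopy cover fraction and mean green coordinate of canopy pixels."""
    canopy_g = []
    for pixel in pixels:
        r, g, b = chromatic(pixel)
        if 2 * g - r - b > threshold:  # excess green (ExG) marks vegetation
            canopy_g.append(g)
    cover = len(canopy_g) / len(pixels)
    greenness = sum(canopy_g) / len(canopy_g) if canopy_g else 0.0
    return cover, greenness


def window_of(hour):
    """Name of the time-of-day window containing `hour`, or None outside 5am-8pm."""
    for name, (start, end) in TIME_WINDOWS.items():
        if start <= hour < end:
            return name
    return None


def error_by_window(readings):
    """Mean absolute % difference from the daily mean, per window, for one plot-day.

    `readings` is a list of (hour, value) pairs, e.g. cover from 3-hourly images.
    """
    daily_mean = sum(value for _, value in readings) / len(readings)
    if daily_mean == 0:
        raise ValueError("daily mean is zero; percentage error undefined")
    errors = {}
    for hour, value in readings:
        name = window_of(hour)
        if name is not None:
            errors.setdefault(name, []).append(abs(value - daily_mean) / daily_mean * 100)
    return {name: sum(e) / len(e) for name, e in errors.items()}
```

Chromatic coordinates normalise out overall brightness, which is one common way to reduce (but not remove) sensitivity to lighting; shadows and low sun angles can still shift the ExG values.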

Results

Linear regression algorithm for estimating nitrogen status

Table 1 compares the performance of linear regressions fitted between modelled soil and leaf nitrogen status and measurements of spectral reflectance (NDVI and NDRE), soil water status (volumetric soil water content and estimated drained upper limit) and crop features (height, width and tiller counts). Results are shown as coefficients of determination (R²), where values of 0 and 1 indicate low and high correlations, respectively. The regressions were fitted on each day of machine vision and crop ground-truthing data collection, and Table 1 shows the data type with the highest coefficient of determination on each day. Regressions were fitted for data between emergence (GS00) and harvest (GS99), although in-season nitrogen decisions are typically made by GS30.
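A minimal sketch of the per-day screening behind Table 1 is shown below. It assumes each data type is represented by its individual variables and that the best single-variable ordinary least-squares fit is reported for each day; the variable names in `DATA_TYPES` are hypothetical. For a simple linear regression, R² equals the squared Pearson correlation, which is what `r_squared` computes.

```python
DATA_TYPES = {  # hypothetical variable names grouped by data type
    "Spectral reflectance": ["ndvi", "ndre"],
    "Soil water status": ["soil_water", "dul"],
    "Crop features": ["height", "width", "tillers"],
}


def r_squared(x, y):
    """R² of an ordinary least-squares line y = a + b*x (equals Pearson r squared)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        return 0.0  # a constant variable explains nothing
    return sxy * sxy / (sxx * syy)


def best_data_type(samples, target):
    """Return (data type, variable, R²) for the variable that best explains `target`.

    `samples` holds one dict of measurements per location on a single sampling day.
    """
    y = [s[target] for s in samples]
    best = None
    for dtype, variables in DATA_TYPES.items():
        for var in variables:
            r2 = r_squared([s[var] for s in samples], y)
            if best is None or r2 > best[2]:
                best = (dtype, var, r2)
    return best
```

Running `best_data_type` once per sampling day and per modelled nitrogen variable would yield one row of a table shaped like Table 1.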

Table 1 shows that individual measured factors were only partially correlated with N status, with coefficients of determination below 0.4 at GS25. This may indicate that multiple data types are required to estimate nitrogen status, or that there were errors in the soil and leaf nitrogen status modelled by APSIM. It could also indicate that the field measurements were affected by factors other than nitrogen (e.g. soil characteristics and sowing density), introducing measurement error that needs to be reduced if N status is to be estimated more accurately.

Simulated results showed the highest correlations between crop features and modelled leaf and soil nitrogen at GS30, which is the latest stage at which in-season nitrogen decisions would be made. Correlations between modelled nitrogen status and soil water status, spectral reflectance and crop features were low at most earlier and later growth stages.

Table 1. Data types with the highest correlation with modelled leaf and soil nitrogen at different days after sowing. Coefficients of determination closer to one indicate higher correlations and are highlighted in grey.

| Days after sowing | Zadoks growth stage | Modelled leaf N (g/m²): data type | R² | Modelled NH4-N (ppm): data type | R² | Modelled NO3-N (ppm): data type | R² |
|---|---|---|---|---|---|---|---|
| 36 | 25 | Soil water status | 0.389 | Crop features | 0.289 | Crop features | 0.351 |
| 43 | 25 | Crop features | 0.285 | Spectral reflectance | 0.217 | Crop features | 0.251 |
| 50 | 30 | Crop features | 0.749 | Soil water status | 0.530 | Crop features | 0.781 |
| 60 | 42 | Soil water status | 0.391 | Spectral reflectance | 0.658 | Spectral reflectance | 0.564 |
| 67 | 59 | Crop features | 0.211 | Spectral reflectance | 0.692 | Spectral reflectance | 0.704 |
| 71 | 66 | Crop features | 0.296 | Crop features | 0.384 | Spectral reflectance | 0.375 |
| 78 | 72 | Soil water status | 0.462 | Soil water status | 0.285 | Crop features | 0.224 |
| 85 | 76 | Spectral reflectance | 0.340 | Spectral reflectance | 0.512 | Spectral reflectance | 0.409 |
| 93 | 81 | Spectral reflectance | 0.418 | Spectral reflectance | 0.666 | Spectral reflectance | 0.591 |
| 99 | 84 | Spectral reflectance | 0.345 | Spectral reflectance | 0.584 | Spectral reflectance | 0.411 |
| 105 | 90 | Spectral reflectance | 0.061 | Spectral reflectance | 0.369 | Crop features | 0.379 |
| 113 | 99 | Soil water status | 0.210 | Soil water status | 0.313 | Crop features | 0.379 |

Linear scaling algorithm for estimating nitrogen status

Table 2 compares how accurately the linear scaling algorithm estimated leaf and soil nitrogen status. Using crop features in the linear scaling algorithm produced the highest accuracy in estimating nitrogen status (82.3-87.4%), and this high accuracy was consistent for all sources of underlying variability. Accuracy was lower using spectral reflectance (66.2-70.4%). This indicates that crop features could be used without spectral reflectance to estimate nitrogen status, and that a single underlying variability field map (e.g. drained upper limit from the CSIRO Soil and Landscape Grid of Australia) could be used.

Table 2. Comparison of percentage accuracy for estimating soil and leaf nitrogen using a linear scaling algorithm considering different types of underlying variability.

| Underlying variability data considered | Accuracy using spectral reflectance (%) | Accuracy using crop features (%) |
|---|---|---|
| Soil water | 67.3±6.5 | 85.1±6.8 |
| Drained upper limit | 70.4±11.5 | 85.4±7.4 |
| Sowing density | 66.2±5.6 | 87.4±7.2 |
| Soil water, drained upper limit and sowing density | 66.8±6.6 | 82.3±10.8 |
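The size of the advantage of crop features over spectral reflectance can be checked directly from the mean accuracies in Table 2; the short calculation below uses only the table values.

```python
# Mean accuracies (%) from Table 2: (spectral reflectance, crop features)
table2 = {
    "Soil water": (67.3, 85.1),
    "Drained upper limit": (70.4, 85.4),
    "Sowing density": (66.2, 87.4),
    "Soil water, drained upper limit and sowing density": (66.8, 82.3),
}

# Percentage-point advantage of crop features over spectral reflectance
gaps = {name: round(crop - spectral, 1) for name, (spectral, crop) in table2.items()}
for name, gap in gaps.items():
    print(f"{name}: {gap} percentage points")
print(min(gaps.values()), max(gaps.values()))  # prints 15.0 21.2
```

The gap is expressed in percentage points because both columns are already percentages.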

Machine learning algorithm for estimating nitrogen status

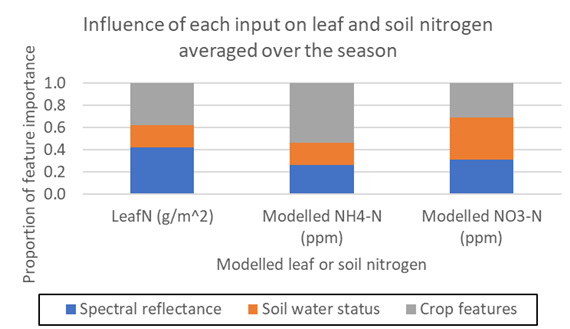

Figure 2 compares the relative importance of spectral reflectance, soil water status and crop features for predicting modelled leaf nitrogen, soil ammonium-N and soil nitrate-N during GS25-GS30. Spectral reflectance had the largest influence on modelled leaf nitrogen status (42%), whilst crop features had the largest influence on modelled soil ammonium-N (54%). Soil water status and crop features contributed equally to modelled soil nitrate-N (38% each). This indicates that multiple data inputs (e.g. soil water and crop features, or spectral reflectance and crop features) may be required to estimate both leaf and soil nitrogen status.

Figure 2. Comparison of feature importance for each input data type on modelled leaf and soil nitrogen for GS25-GS30.
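Data-type importances like those in Figure 2 can be derived from per-variable importances by summing within each data type. This sketch assumes the per-variable importances come from a fitted random forest (for example, scikit-learn's `RandomForestClassifier.feature_importances_`, which sums to 1) and that summing within groups is how the data-type totals were formed; the variable names and values in the example are hypothetical.

```python
def importance_by_data_type(importances, data_types):
    """Sum per-variable importances within each data type, as % of the total."""
    totals = {
        dtype: sum(importances.get(var, 0.0) for var in variables)
        for dtype, variables in data_types.items()
    }
    grand_total = sum(totals.values())
    if grand_total == 0:
        raise ValueError("all importances are zero")
    return {dtype: 100.0 * total / grand_total for dtype, total in totals.items()}


# Hypothetical per-variable importances, e.g. dict(zip(names, model.feature_importances_))
importances = {"ndvi": 0.2, "ndre": 0.2, "soil_water": 0.1, "dul": 0.1,
               "height": 0.2, "width": 0.1, "tillers": 0.1}
data_types = {
    "Spectral reflectance": ["ndvi", "ndre"],
    "Soil water status": ["soil_water", "dul"],
    "Crop features": ["height", "width", "tillers"],
}
for dtype, share in importance_by_data_type(importances, data_types).items():
    print(f"{dtype}: {share:.0f}%")
```

Impurity-based importances can favour variables with many distinct values, so permutation importance is a common cross-check.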

Table 3 compares the percentage accuracy of each machine learning model with the four data input combinations for determining modelled leaf nitrogen, soil ammonium-N and soil nitrate-N. The accuracies using machine learning on the training dataset were generally low (<60%); they were higher than those of the linear regression nitrogen algorithms (<40%) but lower than those of the linear scaling algorithms (>60%). This suggests that a larger training dataset is required for machine learning model training, potentially with additional ramped nitrogen treatments.

The highest accuracies were achieved using all data (59.2%) or spectral reflectance and soil water status (54.2%). Of the eight machine learning algorithms evaluated, support vector machines produced the highest overall accuracy, averaged across input combinations (52.7%). This superior performance may be due to the reduced tendency to overfit that is inherent in support vector machines.

Table 3. Comparison of averaged percentage accuracy in modelled soil and leaf nitrogen using different machine learning models and data input combinations, with the highest accuracies highlighted in grey.

| Data input combination | Logistic regression | Support vector machines | Random forest | Extra trees | Linear discriminant analysis | Nearest neighbour | Decision tree | Naïve Bayes |
|---|---|---|---|---|---|---|---|---|
| All | 51.7±7.3 | 59.2±2.7 | 44.4±20.6 | 46.1±16.5 | 47.6±3.2 | 47.9±14.1 | 47.7±5.6 | 41.6±19.4 |
| Spectral reflectance | 52.2±9.3 | 51.1±11.2 | 44.0±9.1 | 44.4±12.8 | 51.3±6.1 | 46.8±11.4 | 49.0±0.9 | 39±14.1 |
| Spectral reflectance and soil water status | 50.2±4.9 | 54.2±5.5 | 45.0±17.3 | 53.1±5.5 | 45.2±1.9 | 49.6±11.6 | 51.7±5.4 | 40.8±13.9 |
| Crop features | 44.4±7.2 | 46.3±5.8 | 38.8±7.4 | 48.6±4.2 | 40.9±12.1 | 42.7±15.6 | 48.8±2.7 | 39.1±18.0 |
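The overall accuracy quoted for support vector machines (52.7%) is consistent with a simple mean across the four input data combinations in Table 3; the calculation below uses only the table values.

```python
# Mean accuracies (%) from Table 3, in row order: All, Spectral reflectance,
# Spectral reflectance and soil water status, Crop features
table3 = {
    "Logistic regression": [51.7, 52.2, 50.2, 44.4],
    "Support vector machines": [59.2, 51.1, 54.2, 46.3],
    "Random forest": [44.4, 44.0, 45.0, 38.8],
    "Extra trees": [46.1, 44.4, 53.1, 48.6],
    "Linear discriminant analysis": [47.6, 51.3, 45.2, 40.9],
    "Nearest neighbour": [47.9, 46.8, 49.6, 42.7],
    "Decision tree": [47.7, 49.0, 51.7, 48.8],
    "Naïve Bayes": [41.6, 39.0, 40.8, 39.1],
}

# Average each model's accuracy over the four input data combinations
overall = {model: sum(acc) / len(acc) for model, acc in table3.items()}
best = max(overall, key=overall.get)
print(best, round(overall[best], 1))  # prints Support vector machines 52.7
```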

Considerations for machine vision development for automated nitrogen status sensing

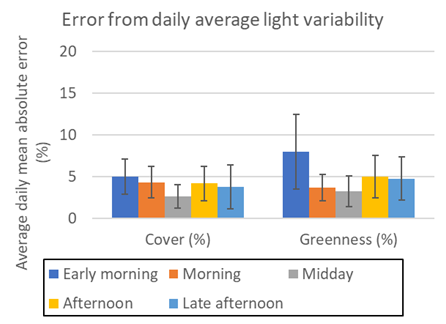

Figure 3 shows the average difference in cover and greenness across the plots at different times of day. Errors in cover and greenness due to lighting variation during the day were generally low (under 5%). Errors were lowest at midday (2.6% for cover and 3.3% for greenness) and highest in the early morning (4.8% and 8.0%, respectively). The machine vision camera results are consistent with those of the optical sensor (Porter 2010), with the largest errors occurring in the early morning. The machine vision sensor had lower errors than the optical sensor during the later morning (4.3% for cover and 3.7% for greenness, compared with the 6.3% NDVI variation between 8am and 11am). Therefore, the impact of time of day on machine vision sensing is comparable with its impact on optical sensors.

Figure 3. Daily mean absolute error in cover and greenness from in-field cameras at different times of day with different lighting conditions.

Discussion

A pilot simulation and field study was conducted to establish the role of machine vision in a nitrogen sensing system. The performance of three nitrogen status algorithms was compared using modelled soil and leaf nitrogen and different combinations of measured data: spectral reflectance (current standard practice), soil water status and crop features that could be measured using machine vision.

A linear regression algorithm using single data inputs produced correlations that were generally low (<0.5) for all data types, suggesting that more than one data input is required to estimate nitrogen status. A linear scaling algorithm that also accounted for underlying variability in sowing density and soil water status estimated modelled soil and leaf nitrogen more accurately than linear regression. The highest accuracy was achieved using crop features as inputs (82.3-87.4%), and lower accuracy was achieved using spectral reflectance (66.2-70.4%). This indicates that crop features detectable by machine vision may provide a better indication of nitrogen status than the current standard of spectral reflectance.

Machine learning algorithms had improved repeatability over linear regression but lower accuracy than the linear scaling algorithms, with test accuracies below 60%. The highest accuracies were achieved using all data (59.2%) or spectral reflectance and soil water status (54.2%), and the highest overall accuracy was achieved using support vector machines (52.7%). A larger training dataset with additional multiple-rate nitrogen treatments may be required to improve machine learning algorithm performance.

Machine vision-estimated crop features may be affected by time of day and lighting. However, this impact was comparable with that on optical sensors, with errors in cover and greenness generally below 5% across the day.

Conclusions

Machine vision has potential to improve nitrogen status estimation by sensing crop features in N-minus and N-rich plots. A linear scaling algorithm using plant features together with underlying soil variability estimated modelled soil and leaf nitrogen with an accuracy of 82.3-87.4%. This outperformed the same algorithm using spectral reflectance by 15 to 21 percentage points. Linear regression and machine learning models produced lower accuracies for modelled soil and leaf nitrogen status, potentially because additional training data are required. Further work will involve transferring the machine vision system and nitrogen status algorithms to Future Farm core sites nationally. This will enable refinement of the machine vision algorithms (e.g. using deep learning) and evaluation of the nitrogen status algorithms against in-field measurements of soil and leaf nitrogen status.

Acknowledgements

The research undertaken as part of this project is made possible by the significant contributions of growers through both trial cooperation and the support of the GRDC; the authors would like to thank them for their continued support. The authors are grateful to Mr Jake Humpal for collecting ground-truthing measurements and to USQ's Centre for Crop Health for managing the barley trial site.

References

Boyle, R.D., Corke, F.M.K. and Doonan, J.H. (2015) Automated estimation of tiller number in wheat by ribbon detection. Machine Vision and Applications 27:637–646.

Chen, L., Papandreou, G., Kokkinos, I., Murphy, K. and Yuille, A.L. (2018) DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence 40:834–848.

Chlingaryan, A., Sukkarieh, S. and Whelan, B. (2018) Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Computers and Electronics in Agriculture 151:61-69.

dos Santos, M.M.M., de Paiva Alves, E., Silva, B.V., de Oliveira Reis, F., Partelli, F.L., Cecon, P.R. and Braun, H. (2016) Application of digital images for nitrogen status diagnosis in three Vigna unguiculata cultivar. Australian Journal of Crop Science 10(7):949-955.

Hussein, M.A.H. (2018) Agronomic and economic performance of arable crops as affected by controlled and non-controlled traffic of farm machinery. PhD Thesis, University of Southern Queensland.

Jin, X., Liu, S., Baret, F., Hemmerlé, M. and Comar, A. (2017) Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sensing of Environment 198:105–114.

Kamilaris, A. and Prenafeta-Boldú, F.X. (2018) Deep learning in agriculture: A survey. Computers and Electronics in Agriculture 147:70–90.

Koirala, A., Walsh, K.B., Wang, Z. and Anderson, N. (2020) Deep Learning for Mango (Mangifera indica) Panicle Stage Classification. Agronomy 10(1):143.

Lawes, R.A., Oliver, Y.M. and Huth, N.I. (2019) Optimal nitrogen rate can be predicted using average yield and estimates of soil water and leaf nitrogen with infield experimentation, Biometry, Modelling, and Statistics 111:1155-1164.

Li, Y., Chen, D., Walker, C.N. and Angus, J.F. (2010) Estimating the nitrogen status of crops using a digital camera. Field Crops Research 118:221–22.

Liu, S.Y., Baret, F., Andrieu, B., Burger, P. and Hemmerlé, M. (2017) Estimation of wheat plant density at early stages using high resolution imagery. Frontiers in Plant Science 8:739.

Miller, J.O. and Shober, A. (2018) Complete Wheat Tiller Counts Now to Determine the Need for Spring Nitrogen, University of Delaware, viewed 13 June 2019, https://extension.udel.edu/weeklycropupdate/?p=11489

Muñoz-Huerta, R.F., Guevara-Gonzalez, R.G., Contreras-Medina, L.M., Torres-Pacheco, I., Prado-Olivarez, J. and Ocampo-Velazquez, R.V. (2013) A Review of Methods for Sensing the Nitrogen Status in Plants: Advantages, Disadvantages and Recent Advances. Sensors 13:10823-10843.

Poole, N. and White, B. (2008) Use of crop sensors for determining N application during stem elongation in wheat, Project report GRDC SFS000015.

Poole, N. and Craig, S. (2010) Use of crop sensors for determining N application during stem elongation in wheat, Project report GRDC SFS000015.

Porter, W. (2010) Sensor Based Nitrogen Management for Cotton Production in Coastal Plain Soils, Master of Science Thesis, Clemson University.

Voight, D.G. (2019) Wheat Stand Assessment, Penn State Extension, viewed 13 June 2019, https://extension.psu.edu/wheat-stand-assessment-2

Wang, Y., Wang, D., Shi, P. and Omasa, K. (2014) Estimating rice chlorophyll content and leaf nitrogen concentration with a digital still color camera under natural light. Plant Methods 10:1-11.

Wu, D., Guo, Z., Ye, J., Feng, H., Liu, J., Chen, G., Zheng, J., Yan, D., Yang, X., Xiong, X., Liu, Q., Niu, Z., Gay, A.P., Doonan, J.H., Xiong, L. and Yang, W. (2019) Combining high-throughput micro-CT-RGB phenotyping and genome-wide association study to dissect the genetic architecture of tiller growth in rice, Journal of Experimental Botany, 70(2):545-561.

Contact details

Alison McCarthy

Centre for Agricultural Engineering, University of Southern Queensland

West Street, Toowoomba QLD 4350

Ph: 0458 845 858

Fx: 07 4631 1870

Email: mccarthy@usq.edu.au

™ Trademark

® Registered trademark

GRDC Project Code: CSP1803-020RMX
