Implications of robotics and autonomous vehicles for the grains industry

Author: Mark Calleija (Australian Centre for Field Robotics (ACFR), The University of Sydney) | Date: 13 Feb 2018

Take home messages

- The Australian Centre for Field Robotics (ACFR) is one of the largest field robotics institutes in the world and has been developing innovative robotics and intelligent software for the agriculture and environment community for over a decade.

- The ACFR’s current research is conducted for the vegetable, tree crop and grazing livestock industries, as well as for small-scale farming, weed detection and wildlife tracking applications.

- The ACFR’s experience in many aspects of the agricultural industry has the potential to be adapted to aspects of the grains industry.

Background

The University of Sydney’s Australian Centre for Field Robotics (ACFR) is one of the largest field robotics institutes in the world. The ACFR has a significant portfolio of both industry driven and academic projects spanning logistics, exploration, agriculture and the environment, intelligent transport systems, marine systems and security and defence.

Led by Professor Salah Sukkarieh, ACFR has been conducting research in autonomous remote sensing systems and developing innovative robotics and intelligent software for the agriculture and environment community for over a decade. Our current key research areas are:

- Development of crop intelligence and decision support systems for the vegetable industry.

- Ground and aerial platforms for the dairy and grazing livestock industry.

- Monitoring tools for managing tree crops.

- Low cost robotics for supporting agriculture on small scale farms, including those in developing countries.

- Using agricultural robotics applications for Science, Technology, Engineering and Mathematics (STEM) education.

Examples of some of ACFR’s key collaborations are shown in Figure 1.

Figure 1. Examples of ACFR’s industry collaborations.

The intent of this discussion is to share some of the cutting edge technologies ACFR are developing and to ask the grains industry if, or how, they believe these or similar technologies can be introduced to revolutionise grain farming productivity.

Research and technology themes

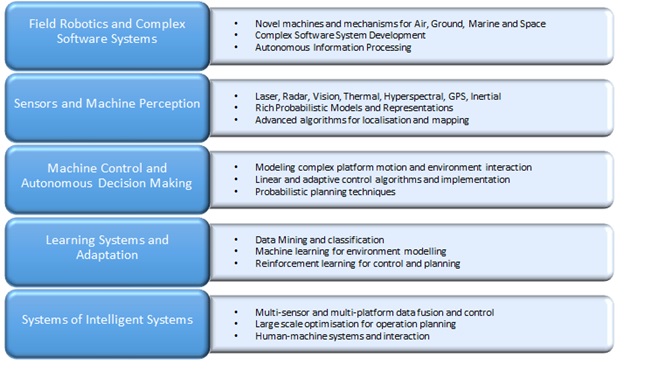

Activities at ACFR are broadly broken up into five technology themes as detailed in Figure 2.

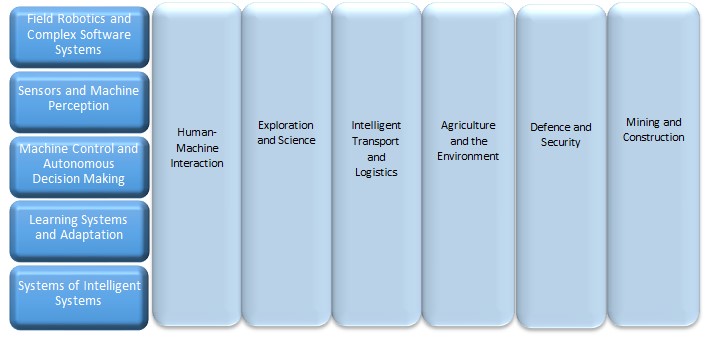

Each of these five technology themes is applied to various industries as detailed in Figure 3.

This structure demonstrates a typical breakdown of activities and the types of work that go into a typical industrial field robotics project.

Figure 2. ACFR’s research and technology themes and the industries to which they are applied.

Figure 3. The application areas and the industries to which each of the research and technology themes are applied.

Vegetable industry research

The Horticulture Innovation Centre for Robotics and Intelligent Systems (HICRIS) is a partnership between the ACFR and Horticulture Innovation Australia. HICRIS are currently delivering two projects for the vegetable industry.

Current projects

Using autonomous systems to guide vegetable decision making on farm

This project involves the research and development of sensing, automated decision support systems and robotic tools for crop interaction that will enable vegetable farmers to reduce production costs and increase productivity. The Ladybird and RIPPA™ platforms are used to collect farm data and trial new systems and tools.

Evaluation and testing autonomous systems developed in Australian vegetable production systems

This project extended the testing of the technology developed in the project listed above and demonstrated the Robot for Intelligent Perception and Precision Application (RIPPA) in different growing regions in Australia to prove the operational effectiveness of the systems that have been developed. The work is being carried out in conjunction with project partners RMCG and TechMac Pty Ltd to undertake economic, market and intellectual property (IP) evaluations to define commercial pathways for the technology that will benefit vegetable growers.

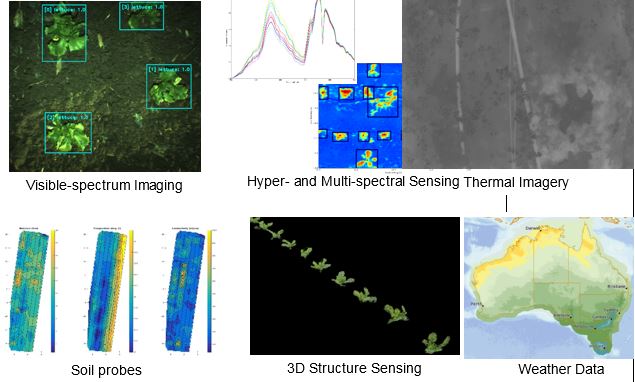

Sensing Modalities

The Ladybird and RIPPA platforms are used to collect crop data using a range of sensing modalities including:

- Visible spectrum imaging using red, green and blue (RGB) cameras.

- Hyper- and multi-spectral imaging.

- Thermal imagery.

- 3D structural sensing.

- Global positioning system/inertial navigation system (GPS/INS).

- Ranging (Light Detection and Ranging (LIDAR)).

As part of experimental trials, data from a weather station and static soil probes are also collected. A soil probe has been integrated with RIPPA, allowing soil metrics to be collected autonomously and at a higher resolution (Figure 4).

Figure 4. Data collected with the Ladybird and RIPPA platforms used for vegetable industry research

Decision Support

The data collected by the Ladybird and RIPPA platforms will allow the development of systems to support on-farm decision making. Decision support tools include:

- Crop mapping visualisations which can highlight areas with poor crop health to aid scouting activities.

- Estimation of crop quality metrics.

- Yield estimation and predicted optimum harvest time.

Ladybird farm robot

Ladybird is a general purpose research robot that conducts various on-farm crop intelligence and crop manipulation activities. Ladybird’s rechargeable battery lasts for 7 to 9 hours and its solar panels enable continuous operation on cloudless days. The platform has numerous sensing systems, including hyperspectral, thermal infrared, panoramic vision, stereo vision with strobe, LIDAR and global positioning. These sensors allow many aspects of the crop to be measured and assessed. Ladybird also has a robotic arm, which is effective for targeted and mechanical crop manipulation (Figure 5).

Figure 5. The Ladybird farm robot on a beetroot crop in Cowra, NSW

The Ladybird’s advanced software and hardware enable its super-set of functionalities to achieve many research based farming activities. The research outcomes from Ladybird feed into the more commercially focussed and optimised robotic platform, RIPPA.

RIPPA

RIPPA is a production prototype for the vegetable growing industry and is being developed with the aim of minimising production costs and improving the marketable yield of vegetables (Figure 6).

Farm input costs such as labour and fertiliser can be reduced through crop interaction mechanisms. Mechanical weeding and Variable Injection Intelligent Precision Applicator (VIIPA™) payloads have been developed for autonomous real time weeding and spraying of weeds, crops or pests. The mechanical weeder uses RGB camera imagery and deep learning detection algorithms to identify weeds and apply a force using a steel tine to disrupt the weeds. VIIPA is used for autonomous real time variable rate fluid dispensing and has applications in targeting weeds or supplying nutrients to crops based on information collected by sensors and processed using specialised algorithms.

Figure 6. The RIPPA robot on a lettuce crop in Lindenow, VIC

Decision making tools that can be developed using the data that RIPPA collects could be used to increase the marketable yield of crops. Marketable yield is determined by the quality and quantity of a harvest. Crop data could be used to increase the uniformity of crops and crop volume at the time of harvest by providing the following information to growers:

- Identification of crops with poor health due to factors such as water stress, lack of nutrients or the presence of pests.

- Estimates of crop quality metrics such as size.

- Predictions of yield and optimum harvest time.

The key to enabling the reduction in farm input costs and improving marketable yield is ensuring that RIPPA can operate autonomously 24 hours a day, seven days a week using battery and solar power. The solar endurance characteristics of the RIPPA system are currently being tested with RIPPA autonomously traversing crop rows and collecting crop data over extended periods of time.
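To make the idea of solar endurance concrete, the following is a minimal sketch of an hourly energy balance for a battery and solar powered platform. It is not the ACFR's test procedure: the function name `simulate_battery`, the battery capacity, the load and the solar profile are all hypothetical values chosen for illustration.

```python
def simulate_battery(capacity_wh, start_wh, load_w, solar_w_by_hour, hours):
    """Step a simple hourly energy balance and return the charge after each hour.

    With one-hour steps, a power in watts equals the energy in watt-hours
    gained or used during that step. The simulation stops early if the
    battery is exhausted.
    """
    charge = start_wh
    history = []
    for hour in range(hours):
        solar_w = solar_w_by_hour[hour % 24]
        # Add the solar input, subtract the load, and cap at the battery capacity.
        charge = min(capacity_wh, charge + solar_w - load_w)
        if charge <= 0:
            history.append(0.0)
            break
        history.append(charge)
    return history


# Hypothetical solar profile: 400 W between 08:00 and 16:00, nothing otherwise.
solar_profile = [400.0 if 8 <= h < 16 else 0.0 for h in range(24)]

# Hypothetical platform: 2000 Wh battery, fully charged, constant 100 W load.
history = simulate_battery(2000.0, 2000.0, 100.0, solar_profile, hours=72)
print(len(history), min(history))
```

Under these assumed numbers the daily solar input (3200 Wh) exceeds the daily load (2400 Wh), so the battery never empties over the simulated three days. Raising the assumed load above the solar input shows where continuous operation would fail.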

RIPPA functionality has also been demonstrated in an apple orchard showing autonomous row following and changing rows, autonomous real time apple detection and targeted variable fluid dispensing using VIIPA.

Tree crops research

The ACFR has led a number of projects to develop crop intelligence for the Australian tree crop industries, including mangoes, avocados, apples and almonds. HICRIS is supporting research in tree crops with a project to provide decision support tools for farmers.

Current projects

Multi-scale monitoring tools for managing Australian tree crops

This collaborative project will integrate the latest imaging and robotics technologies to provide mango, avocado and macadamia farmers with decision-support tools to help improve production and profit. The data collected through this project and the tools it develops will help farmers to predict fruit quality and yield, and monitor tree health including detection of pests and disease outbreaks.

Shrimp

The Shrimp platform was developed by the ACFR as a general purpose research ground vehicle. Shrimp contains various sensors including:

- Colour imagery (RGB cameras).

- Thermal infrared cameras.

- Hyperspectral imaging.

- GPS/INS.

- Ranging sensors (LIDAR, RADAR, stereo, time-of-flight).

- Soil conductivity and natural gamma radiation sensors.

Colour and hyperspectral imagery are used to identify crops and to estimate and map crop properties, such as flower and fruit counts, using image-based object detection techniques. Ranging sensors support colour imagery sensing and vehicle navigation by determining the location and geometry of each tree. The data is also used to measure the canopy volume and light interception characteristics of every tree, which relate to fruit production. The natural gamma radiation and soil sensors allow us to further model properties of the soil.
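As an illustration of how canopy volume might be derived from ranging data, the sketch below counts occupied voxels in a 3D point cloud. This is one common approach and is not necessarily the method used by the ACFR; the function name `canopy_volume`, the voxel size and the sample points are hypothetical.

```python
def canopy_volume(points, voxel_size):
    """Estimate volume as (number of occupied voxels) x (volume of one voxel).

    points is an iterable of (x, y, z) coordinates in metres, assumed to be
    already segmented to a single tree. voxel_size is the voxel edge length
    in metres.
    """
    occupied = {
        (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        for x, y, z in points
    }
    return len(occupied) * voxel_size ** 3


# Hypothetical points: the first two fall in the same 0.5 m voxel.
sample_points = [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2), (0.6, 0.1, 0.1)]
print(canopy_volume(sample_points, voxel_size=0.5))
```

The estimate depends on the chosen voxel size: a smaller voxel follows the canopy shape more closely but needs a denser point cloud to avoid undercounting.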

Decision support

Imagery and other data collected using Shrimp (Figure 7) can be used to map important characteristics of the trees and their individual performance, including flowering extent and timing per tree, canopy volumes and light interception and fruit production, growth and ripening. These spatial maps allow improved farm management and decision making. Examples include:

- Combining flower timing and extent with heat-sum models allows fruit maturation dates to be estimated and optimal harvest timing to be predicted.

- Yield mapping per tree up to two months prior to harvest allows variable rate application and management.

- Yield mapping per tree for harvest labour forecasting and streamlined packing logistics.

- Fruit production, growth and ripening for detecting poor performing trees or regions in the orchard that require attention.
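The heat-sum idea in the first example above can be sketched as follows. This is a minimal illustration using the simple daily-average method for heat units, not the specific model used in the project; the base temperature, required heat sum, temperatures and function names are hypothetical.

```python
from datetime import date, timedelta


def daily_heat_units(t_min, t_max, t_base):
    """Heat units for one day: mean temperature above the base, never negative."""
    return max(0.0, (t_min + t_max) / 2.0 - t_base)


def predict_maturity_date(flowering_date, daily_temps, t_base, required_heat_sum):
    """Return the first date on which the accumulated heat sum reaches the target.

    daily_temps is a sequence of (t_min, t_max) pairs in degrees Celsius,
    starting on the flowering date. Returns None if the target is never reached.
    """
    total = 0.0
    for offset, (t_min, t_max) in enumerate(daily_temps):
        total += daily_heat_units(t_min, t_max, t_base)
        if total >= required_heat_sum:
            return flowering_date + timedelta(days=offset)
    return None


# Hypothetical inputs: 200 days of 14 to 26 degrees C, base 12, target 800 heat units.
temps = [(14.0, 26.0)] * 200
maturity = predict_maturity_date(date(2018, 9, 1), temps, t_base=12.0,
                                 required_heat_sum=800.0)
print(maturity)
```

In practice the flowering date would come from the per-tree flowering maps and the temperatures from weather records or forecasts, so a maturity date could be estimated for each tree or orchard block.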

Work on measuring and modelling individual tree geometries and light interception characteristics is progressing towards an automated pruning recommendation system that will suggest optimal cuts for the best fruit production outcome.

Figure 7. Shrimp on an apple orchard in Three Bridges, VIC

Grazing livestock research

SwagBot (Figure 8) was originally designed as an all-terrain cattle farming robot. SwagBot’s ability to navigate farm obstacles such as hilly terrain, water, mud and branches was successfully demonstrated at Allynbrook in June 2016. The SwagBot concept has since evolved towards a multi-purpose robot for the grazing livestock industry, with the aim to develop a platform capable of a range of farm surveillance and physical tasks.

SwagBot can autonomously navigate predefined farm routes, detect and spray weeds and collect soil samples at predefined locations. Spot spraying of weeds is a particularly labour-intensive task that is a high-priority candidate for automation, based on feedback from livestock producers.

Figure 8. SwagBot on a cattle station in Nevertire, NSW

Soil samples collected by SwagBot could be sent away to a laboratory for analysis or analysed in-situ to build soil fertility maps for improved pastures.

The ACFR is currently undertaking research supported by Meat & Livestock Australia (MLA) to further develop SwagBot autonomy for applications in pasture measurement and livestock welfare monitoring.

Small-scale farming research

Another key area of research for the ACFR is the development of low cost robotics for supporting agriculture on small scale farms, including those in developing countries. The Digital FarmHand platform (Figure 9) is based on the use of low cost sensors, actuators, computing, electronics and manufacturing techniques which will allow farmers to easily maintain and modify the platform to suit their needs. Similar to a tractor, Digital FarmHand has a hitch mechanism which allows the attachment of various implements. Several implements, including a sprayer, weeder and seeder have been manufactured for the platform. Capabilities to perform row crop analytics and allow automation of simple farming tasks are now being developed with crop data collected using a smart phone and a low cost stereo camera during field trials.

The Digital FarmHand will also be used for a STEM education programme in NSW and for a LAUNCH Food pilot study in 2018 to understand methods for increasing food security in the Pacific Islands. In developing countries, agriculture continues to be the main source of employment. The aim of the Digital Farmhand is to improve food and nutrition security for row crop farmers in Australia and abroad.

Figure 9. Initial prototype of Digital Farmhand operating in Bandung, Indonesia

Weed detection and wildlife tracking research

The ACFR has worked with unmanned aerial vehicles (UAVs) and remote sensing technology for the research and development of weed detection and classification algorithms and wildlife tracking applications. Examples of projects include those described within the following list of references:

- Optimising remotely acquired, dense point cloud data for plantation inventory (PNC377-1516 Jan 2017 – Dec 2017).

- Deployment and integration of cost-effective, high spatial resolution, remotely sensed data for the Australian forestry industry; Sukkarieh S; Forest & Wood Products Research & Development Corp. (FWPRDC)/Research Grant. 201, 2014-2017.

- Imaging technology and unmanned aerial vehicles to detect weed species; Sukkarieh S; Local Land Services/Activity Agreement, 2014-2016.

- Review of Unmanned Aerial Systems (UAS) for Marine Surveys; New South Wales (NSW) Department of Primary Industries (DPI), 2015. This project was a comparative analysis of UAS for application to coastal surveys (bather safety, shark spotting), including assessment of the regulatory environment, safety, cost and remote sensing technologies.

- Alligator Weed detection with UAVs; Fitch R, Sukkarieh S; Department of Primary Industries (Vic)/Research Support, 2013-2014.

- New detection and classification algorithms for mapping woody weeds from UAV data; Sukkarieh S; Meat and Livestock Australia Ltd/Livestock Production Research and Development Program: Strategic and Applied Research Funding. 2010-2012.

UAV platforms used

The ACFR has experience in flight operations and remote sensing for a range of platform types and can operate a range of fixed and rotary wing platforms with payloads ranging from 0.5 kg to 15 kg. The UAVs owned and operated by ACFR are listed in Table 1.

Table 1. UAVs owned and operated by ACFR.

| Name | Type | Power | Description | Project/application |
|---|---|---|---|---|
| Brumby Mk III | Fixed wing | Internal combustion (IC) | Custom designed research testbed for sensor fusion and cooperative control, 2.8 m wingspan, 15 kg payload | Sensor fusion research |
| J3 Cub UAV | Fixed wing | Internal combustion (IC) | Custom built 1/3 scale, medium (60 min) endurance, short take-off and landing, suitable for unprepared landing strips, 15 kg payload | Weed mapping |
| Elimco E300 (see Fig. 1) | Fixed wing | Electric motor | Off-the-shelf survey aircraft, 60 min endurance, catapult launch, belly landing, 4.8 m wingspan, 4 kg payload | Weed mapping |
| Skywalker UAV | Fixed wing | Electric motor | Off-the-shelf, low-cost, lightweight UAV, 1 kg payload, hand launch, belly landing | Internal R&D |
| G18 Aeolus helicopter | Rotary wing – single rotor | Internal combustion (IC) | Modified off-the-shelf helicopter, 5 kg payload | Weed mapping, spraying |
| Mikrokopter Hexacopter | Rotary wing – multirotor | Electric motor | Off-the-shelf drone for research/industrial work, 2 kg payload | Weed mapping |
| AscTec Falcon8 (see Fig. 2) | Rotary wing – multirotor | Electric motor | Off-the-shelf industrial drone, 0.8 kg payload | Forestry, weeds, animal tracking |
| 3DR Solo | Rotary wing – multirotor | Electric motor | Consumer drone, 0.5 kg payload | Internal R&D, aerial photography, pilot training |

Potential applications in the grains industry

The agricultural systems ACFR has developed to this point focus primarily on horticulture and grazing livestock. However, from a technological standpoint these systems can be systematically re-used and adapted towards the grains industry for similar tasks such as robotic weeding, spraying and decision support.

Conclusion

As one of the world’s leading field robotics groups, the ACFR has introduced transformational technologies into many industries with agriculture being a growing area of research and focus for commercialisation. Our experience in many aspects of the agricultural industry has the potential to be adapted to aspects of the grains industry should there be sufficient R&D support to do so. The intent of this discussion is to share some of the cutting edge technologies ACFR are developing and to ask the grains industry if, or how, they believe these or similar technologies can be introduced to revolutionise grain farming productivity.

Contact details

Mark Calleija

Australian Centre for Field Robotics, The University of Sydney

+61 413 588 311

mark@acfr.usyd.edu.au
