Advances in weed recognition: the importance of identifying the appropriate approaches for the development of a weed recognition algorithm for Australian cropping

Authors: Michael Walsh, Asher Bender and Guy Coleman (University of Sydney) | Date: 10 Feb 2022

Take home messages

- Effective in-crop weed recognition requires many tens of thousands to millions of annotated images of weeds in crop scenarios.

- Weed-AI enables the open-source publication and compilation of annotated weed images.

- A large publicly available annotated weed image database enables the development and introduction of alternative weed control technologies for Australian grain production systems.

Background

Widespread herbicide resistance has reinforced the need for alternative weed control techniques in Australian cropping systems. Apart from harvest weed seed control (HWSC) (Walsh et al. 2013), there has been a general lack of development of alternative weed control practices suitable for use in conservation cropping systems. Critically, herbicides remain the only option for in-crop use during the important early post-emergence phase, when weed control is essential for yield preservation. A recent comparison of weed control techniques highlighted the unacceptably high energy requirements of alternative practices when used as conventional “blanket” treatments (Coleman et al. 2019). This research did, however, show that chemical and non-chemical techniques had equivalent energy requirements when they were applied site-specifically to weed targets. Realising in-crop site-specific weed control (SSWC) requires the development of accurate weed recognition.

Advances in weed recognition capability have created the potential for in-crop SSWC in Australian grain cropping systems. In particular, substantial improvements in computational power and machine-learning (ML) performance have resulted in the development of potentially highly effective RGB camera-based weed recognition systems (Fernández-Quintanilla et al. 2018). These systems are well suited to the complex task of accurate in-crop weed recognition, which in turn enables the in-crop site-specific delivery of weed control treatments (Wang et al. 2019). Suitably accurate in-crop weed recognition creates the opportunity to selectively target weeds with non-selective physical and thermal weed control treatments, thereby expanding the options for in-crop weed control. Depending on weed density, an SSWC approach enables growers to reduce inputs of weed control treatments, both chemical and non-chemical, by up to 90%, and to lower the agronomic and environmental risks associated with some weed control treatments (Timmermann et al. 2003). The opportunity for substantial cost savings and for the introduction of a wide range of novel control tactics is driving weed management towards site-specific weed control (Keller et al. 2014).

ML-based weed recognition relies on the availability of suitably collected and annotated weed images for the development of recognition algorithms. The performance of in-crop weed recognition algorithms depends entirely on an appropriate image database of the target weeds in the relevant crops. At present, commercial development of weed recognition algorithms by weed control companies in Australia (for example, Autoweed, Bilberry, Alterratech) requires each company to independently collect and annotate images of individual weeds for specific crops. It is estimated that, for each of these weed and crop scenarios, between 10 000 and 1 000 000 annotated images may be required for sufficiently accurate weed recognition algorithms. The larger and more visually diverse the dataset (for example, in weather, lighting, growth stage and plant health), the more likely it is that the algorithm will recognise weeds in diverse field conditions. Given that preliminary efforts on Australian grain crop weed recognition algorithm development have achieved only modest levels of accuracy (60%) for simple scenarios, such as brassica weeds in cereals, annotation requirements are expected to be at the mid to upper end of this estimate (Su et al. 2021). The development of weed recognition capability is therefore an onerous task and a major impediment to individual weed control companies looking to implement site-specific weed control technologies in Australia.

The aims for this research were to:

- develop and evaluate weed recognition algorithms for annual ryegrass (Lolium rigidum), turnip weed (Rapistrum rugosum) and sowthistle (Sonchus oleraceus) in wheat and chickpea crops

- establish an open-source weed recognition library to facilitate the introduction of weed recognition technologies for Australian cropping.

Methods

Image collection

Approximately 2000 images of wheat and chickpea were collected at Narrabri and Cobbitty (NSW) during the 2019 and 2020 winter growing seasons using FLIR Blackfly 23S6C and 70S7C cameras. The dataset spans two growing seasons and a range of backgrounds, lighting conditions (natural and artificial illumination) and growth stages. The images were annotated with bounding boxes to identify annual ryegrass and turnip weed. The algorithms were trained on 80% of the data, with the remaining 20% reserved for testing.
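The 80:20 train/test split described above can be sketched in Python as follows. This is a minimal illustration that assumes a simple random split at the image level; the source does not state how images were allocated, and the image names and the `split_dataset` helper are hypothetical.

```python
import random


def split_dataset(image_ids, train_fraction=0.8, seed=42):
    """Shuffle image IDs and split them into training and test subsets.

    Hypothetical helper: assumes a plain random split at the image level.
    """
    ids = list(image_ids)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(ids)
    n_train = round(len(ids) * train_fraction)
    return ids[:n_train], ids[n_train:]


# Hypothetical image identifiers standing in for the ~2000 collected images
image_ids = [f"img_{i:04d}.jpg" for i in range(2000)]
train_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(test_ids))  # 1600 400
```

Because images taken in the same field or season can look very alike, splitting by site or season rather than by individual image is one way to obtain a more conservative estimate of field performance; the random split shown here is only the simplest option.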

Algorithm training

To evaluate cutting-edge object recognition algorithms on weed recognition tasks, we selected three state-of-the-art architectures: You Only Look Once (YOLO) v5 (June 2020), EfficientDet (June 2020) and Faster R-CNN (2015). Object detection algorithms report both the location of each weed within the image, as a bounding box drawn around it, and the species of the weed.

The algorithms were tested on three data scenarios: weeds in wheat, weeds in chickpea, and weeds in both wheat and chickpea.

The best-performing architecture in that testing, YOLOv5, was then tested at two image resolutions (640 x 640 and 1024 x 1024) and two network sizes (YOLOv5-S and YOLOv5-XL). YOLOv5-S has 7.3 million parameters and YOLOv5-XL has 87.7 million parameters. In theory, larger networks can learn richer and more subtle structures from the data; in practice, they require more data to train and more memory and computation to run. As a result, we were unable to train YOLOv5-XL at the 1024 x 1024 image size because of insufficient memory.
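The cost of the larger settings can be illustrated with simple arithmetic using the parameter counts quoted above. As a rough guide (an assumption, not a measurement from this study), the feature maps inside a convolutional detector scale with the number of input pixels, so activation memory during training grows approximately in proportion to image area. The `pixel_ratio` helper is hypothetical.

```python
def pixel_ratio(new_side, old_side):
    """Ratio of pixel counts between two square input sizes."""
    return (new_side * new_side) / (old_side * old_side)


# Parameter counts quoted in the text, in millions
params_m = {"YOLOv5-S": 7.3, "YOLOv5-XL": 87.7, "Faster R-CNN ResNet-50": 41.5}

# Moving from 640 x 640 to 1024 x 1024 inputs multiplies the pixel count by 2.56,
# so convolutional feature maps (and roughly their memory) grow by a similar factor
print(pixel_ratio(1024, 640))  # 2.56

# YOLOv5-XL has about twelve times as many parameters as YOLOv5-S
print(round(params_m["YOLOv5-XL"] / params_m["YOLOv5-S"], 1))  # 12.0
```

Combining both increases (the largest network and the largest input) multiplies the two effects, which is consistent with the largest configuration being the one that could not be trained.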

Conclusions about network size and image resolution should be drawn only amongst comparable algorithms, because the architecture of an object detector affects how efficiently it turns its size into performance. The Faster R-CNN object detector with a ResNet-50 backbone is the second-largest algorithm tested, with 41.5 million parameters. Despite this theoretical size advantage, five years of research and algorithm innovation have produced more compact networks with higher performance, such as YOLOv5.

Understanding weed recognition performance

Determining the performance of recognition algorithms is a nuanced task; it is not simply a case of counting the number of times the algorithm correctly finds a weed. Where weeds are extremely rare, a classifier that never detects any weeds will be right most of the time and so will score as highly accurate, where accuracy is the proportion of all classifications that are correct. Yet this classifier produces a false negative wherever a weed is missed. Conversely, an algorithm that always detects weeds will never miss a weed, but it will produce many false positives, where the background is incorrectly labelled as a weed, resulting in poor accuracy. Two further measures capture this trade-off: recall, the proportion of actual weeds that are detected, and precision, the proportion of detections that are actually weeds. Algorithms that are more sensitive and label most objects as weeds will have high recall (few missed weeds) but, because many of their detections are wrong (many false positives), low precision. On the other hand, less sensitive models that miss more weeds and pick out only clear examples will have low recall (many missed weeds) but will likely have very high precision. Knowing both of these values helps describe how well an algorithm is working.
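The rare-weed example above can be made concrete with a short Python sketch. It treats an image as a set of hypothetical patches that are each classified as weed or no weed, so that true negatives can be counted; real object detectors do not produce true negatives in this way, and the counts are invented for illustration. Precision is set to 0 here when no weeds are predicted, which is one common convention.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return (accuracy, precision, recall) from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Precision is undefined with no positive predictions; use 0 by convention
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Hypothetical: 1000 image patches, only 10 of which contain a weed.
# "Never detect": every weed is missed, every background patch is correct.
print(classification_metrics(tp=0, fp=0, fn=10, tn=990))   # (0.99, 0.0, 0.0)

# "Always detect": every weed is found, every background patch is flagged.
print(classification_metrics(tp=10, fp=990, fn=0, tn=0))   # (0.01, 0.01, 1.0)
```

The first classifier is 99% accurate yet useless, which is why precision and recall are reported alongside (or instead of) accuracy.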

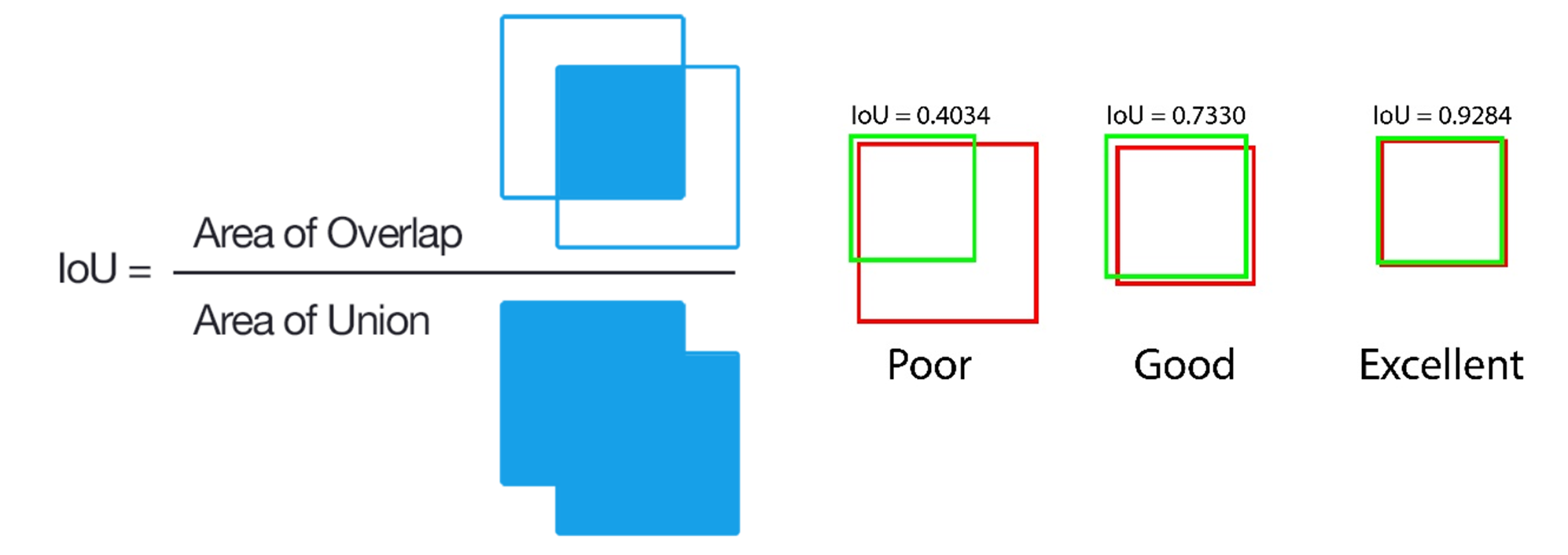

Figure 1. Diagrammatic explanation of the intersection over union (IoU) metric used to measure the localisation accuracy of a recognition algorithm.

Measuring performance in object detection is further complicated by the requirement to give the position of the weed within the image. Whilst accuracy, precision and recall measure the performance of correct versus incorrect classifications (namely, weed vs. no weed), they do not measure how well the algorithm has located the weed within the image. Localisation performance is measured using intersection over union (IoU) (Figure 1), which measures the similarity between the location of a ground-truth weed bounding box and a predicted bounding box: the area where the two boxes overlap divided by the total area they cover together. An IoU of 1 indicates a perfect match and an IoU of 0 indicates no overlap.
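A minimal sketch of the IoU calculation, assuming axis-aligned boxes given as (x_min, y_min, x_max, y_max) pixel coordinates; the box values are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping region (may be empty)
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp to zero when the boxes do not overlap
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (100, 100, 200, 200)  # hypothetical annotated weed box
prediction = (150, 100, 250, 200)    # hypothetical predicted box, shifted right
print(iou(ground_truth, prediction))  # 0.3333333333333333
```

Here the boxes share half of their width, giving an overlap of 5000 pixels out of a combined 15 000, so a detection like this would fail a commonly used IoU threshold of 0.5.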

Bringing precision, recall and IoU together, mean average precision (mAP) is used as a more robust measure of algorithm performance. A detection is counted as correct only when its IoU with a ground-truth box reaches a chosen threshold; precision and recall are then calculated across the detector's range of confidence levels, summarised as an average precision for each weed class, and averaged across classes and, depending on the convention used, across several localisation (IoU) thresholds. Note that although the name rather misleadingly implies that only precision is accounted for, both precision and recall are used within the calculation. In short, mAP measures how well an object detector generates relevant detections (balanced precision and recall) and how well these detections are localised. The measure ranges from 0 to 1, with state-of-the-art algorithms producing a mAP of up to 0.5 on difficult datasets.
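A minimal sketch of how average precision (AP) and mAP can be computed. Several conventions exist (for example, 11-point interpolation in early PASCAL VOC, or COCO's averaging over IoU thresholds from 0.50 to 0.95) and the source does not say which was used, so this shows the all-point interpolated variant at a single IoU threshold. It assumes each detection has already been matched against the ground truth (for instance with an IoU test), and the toy detections and class names are hypothetical, not results from this study.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP for one class at one IoU threshold.

    scores: confidence of each detection.
    is_true_positive: whether each detection matched a ground-truth box
        (matching at the chosen IoU threshold is assumed to be done already).
    """
    if num_ground_truth == 0:
        return 0.0
    # Rank detections from most to least confident
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Precision envelope: each point takes the best precision at its rank or any
    # lower-ranked (equal or higher recall) point
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the stepped precision-recall curve
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """Average the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy detections for two hypothetical classes
ap_a = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], num_ground_truth=3)
ap_b = average_precision([0.95, 0.5], [True, True], num_ground_truth=2)
print(round(ap_a, 4), round(ap_b, 4))  # 0.5556 1.0
print(round(mean_average_precision({"class_a": ap_a, "class_b": ap_b}), 4))  # 0.7778
```

In the first toy class one of the three weeds is never detected, so recall tops out at 2/3 and the AP is penalised even though the highest-confidence detection is correct; this is how missed weeds enter a metric whose name mentions only precision.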

Results and discussion

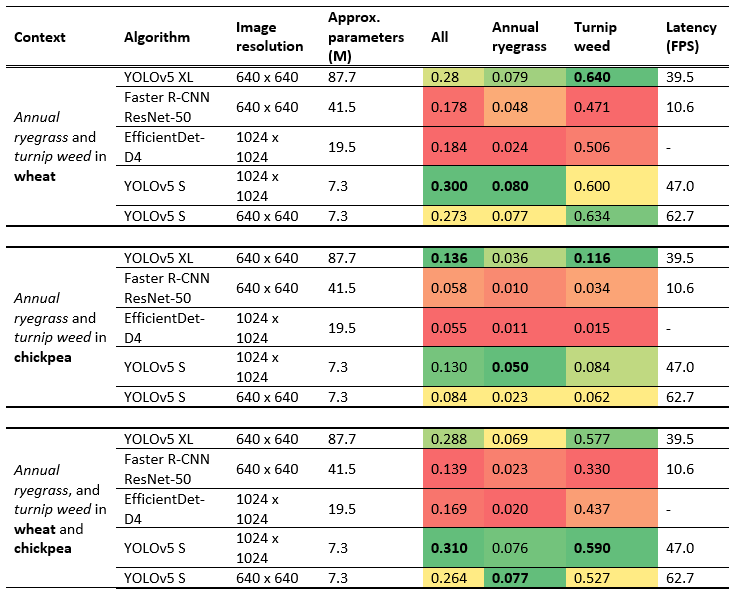

Weed type, background crop type, algorithm size and image size all influenced algorithm performance (Table 1). The best performance across all three classes in the combined dataset was achieved by YOLOv5-S at 1024 x 1024, with a mAP of 0.310. Based on the S vs XL comparison at the 640 x 640 image size, YOLOv5-XL trained on 1024 x 1024 images would likely have outperformed this algorithm; however, memory constraints meant that training it was not possible. YOLOv5-XL (640 x 640) outperformed YOLOv5-S (640 x 640) in all datasets, but the advantage was minor in most cases, despite YOLOv5-XL having twelve times as many parameters as YOLOv5-S.

Table 1: Results from the YOLOv5-XL, YOLOv5-S, Faster R-CNN ResNet-50 and EfficientDet-D4 deep learning architectures at two image resolutions, 640 x 640 and 1024 x 1024. Each algorithm was trained on three scenarios: weeds in wheat, weeds in chickpea, and weeds in both wheat and chickpea. Mean average precision (mAP) results are reported with inference speed in frames per second (FPS). Cells coloured green indicate better performance and cells coloured red indicate worse performance, relative to each dataset. Bold text indicates the highest performance in each scenario.

Because the dataset contained more images of weeds in wheat crops, annual ryegrass was detected better in the wheat and mixed wheat/chickpea datasets than in the chickpea-only dataset. Although annual ryegrass looks very similar to wheat, most of the wheat images were taken at early growth stages before canopy closure, which provided scenes with little background clutter and few occlusions. In contrast, chickpeas have a sprawling habit with many fine leaf structures. This phenotype produces cluttered scenes in which targets are often obscured, making object detection more difficult. These two factors contributed to lower annual ryegrass performance in the chickpea dataset.

Changing the size of the image passed through the object detector network had a bigger effect on performance than changing the algorithm size. In all cases, YOLOv5-S with 1024 x 1024 inputs outperformed YOLOv5-S with 640 x 640 inputs. YOLOv5-S with 1024 x 1024 inputs also outperformed YOLOv5-XL with 640 x 640 inputs in two of the three datasets. This suggests that, for a given network architecture, increasing the image size may bring greater benefits for weed recognition than increasing the network size.

Development of Weed-AI

Access to a shared repository of suitably labelled in-crop weed imagery with standardised metadata reporting would fast-track the development of weed recognition algorithms for Australian grain cropping systems. Further, it would provide opportunities for machine learning researchers around the world to develop new architectures based on Australian weeds. We reviewed currently available open databases and repositories across a wide range of subject areas, with varying modes of contribution, access and management, to identify a suitable open-source platform design for weed imagery. This information enabled us to define the data requirements and recommendations for the Weed-AI platform.

Weed-AI: an open-source platform for annotated weed imagery

Weed-AI fills the data access and metadata standardisation gap. It is an open-source platform that allows datasets to be uploaded, browsed and downloaded. Anyone can contribute to the platform and, importantly, the datasets are maintained under the Creative Commons CC-BY 4.0 license, with the license held by whoever is specified during the upload process. A major advance in the standardisation of metadata reporting was the development of the AgContext information, a whole-of-dataset file that records key attributes of the collection (Table 2). Datasets are searchable and indexed by many of the AgContext fields. Each weed annotated in an image is linked to a scientific name and a standardised common name. Because the specific species of a weed is often difficult to determine when annotating, weeds are frequently grouped at the genus or family level instead; Weed-AI therefore also supports higher-level taxonomic groupings of weed classes, catering for more general categories such as ‘grasses’ or ‘broadleaves’. Together, these tools help standardise contributions of images and annotations.
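The idea of higher-level groupings can be illustrated by collapsing species labels into coarser classes, for example to train a simpler grass vs broadleaf detector. This is a hypothetical sketch only: the `WEED_GROUPS` lookup and `to_group` helper are illustrative and are not Weed-AI's actual implementation.

```python
from collections import Counter

# Hypothetical lookup from scientific name to a coarser group
WEED_GROUPS = {
    "Lolium rigidum": "grasses",         # annual ryegrass (Poaceae)
    "Rapistrum rugosum": "broadleaves",  # turnip weed (Brassicaceae)
    "Sonchus oleraceus": "broadleaves",  # sowthistle (Asteraceae)
}


def to_group(scientific_name):
    """Map a species label to its higher-level group, or 'unknown' if unlisted."""
    return WEED_GROUPS.get(scientific_name, "unknown")


# Illustrative per-box species labels from one image
labels = ["Lolium rigidum", "Sonchus oleraceus", "Rapistrum rugosum", "Lolium rigidum"]
group_counts = Counter(to_group(name) for name in labels)
print(dict(group_counts))  # {'grasses': 2, 'broadleaves': 2}
```

Keeping the species-level label alongside the group means the same annotations can serve both fine-grained and coarse recognition tasks.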

Table 2: Details of the AgContext metadata standard that is required for every dataset uploaded to Weed-AI. This information applies at the whole-of-dataset level and should be filled out to represent the averages for each field across the entire dataset. A web form is provided for entering this information.

| AgContext JSON ID | Entry options | Description |
|---|---|---|
| crop_type | Grain crop (one of 18 pre-filled options); other crop; not in crop (pasture or fallow) | Details the dominant crop type in the uploaded images. |
| bbch_growth_range | Minimum to maximum growth stage range using the BBCH scale | Two values that describe the crop growth stage. |
| soil_colour | Not visible, black, dark brown, brown, red brown, dark red, yellow, pale yellow, white, grey | Generalised description of the visible soil colour in the images, based on the Munsell scale. |
| surface_cover | Cereal, oilseed, legume, cotton, black plastic, white plastic, woodchips, other, none | Background surface cover and type visible behind any plants. Where stubble is present, select the likely crop (for example, cereal, oilseed or legume). |
| surface_coverage | Per cent cover | An estimate of the per cent cover of the soil/background by the surface_cover variable above. |
| weather_description | Describe key features of the weather during collection | Sunlight and shadows can have large impacts on image data. Capturing this information is important to cover all detection conditions. |
| location_lat | Latitude (decimal degrees) | Location where the dataset was collected, including the latitude, longitude and the datum used. |
| location_long | Longitude (decimal degrees) | |
| location_datum | EPSG code for the spatial reference system used | |
| camera_make | Free text | Make/model of the camera used in collection. For phone cameras, include the phone brand and model as well. |
| camera_lens | Free text | Camera lens make/model. Lens information for phone cameras is usually available online; if not, simply include the phone make/model. |
| camera_lens_focallength | Integer | Focal length of the camera/lens. |
| camera_height | Integer | Image collection height above ground, in millimetres (mm). |
| camera_angle | Integer | Image collection angle, in degrees from horizontal. For example, a camera pointing straight down is at 90 degrees. |
| camera_fov | Integer (1–180) | Angle captured by the camera across the diagonal of an image, in degrees. |
| photography_description | Free text | General description of the data collected, including any important or critical information about the dataset not captured elsewhere. |
| cropped_to_plant | True/False | Whether the images are cropped to individual plants. |
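An AgContext record can be pictured as a JSON object keyed by the field names in Table 2. The sketch below builds such an object and runs a few basic checks taken from the table (all fields present, field of view between 1 and 180 degrees, latitude in decimal degrees). The value formats (for example, how the BBCH range or per cent cover is stored) and all example values are assumptions for illustration, not the official Weed-AI schema or a real dataset, and `check_agcontext` is a hypothetical helper.

```python
import json

# Field names taken from Table 2
REQUIRED_FIELDS = [
    "crop_type", "bbch_growth_range", "soil_colour", "surface_cover",
    "surface_coverage", "weather_description", "location_lat", "location_long",
    "location_datum", "camera_make", "camera_lens", "camera_lens_focallength",
    "camera_height", "camera_angle", "camera_fov", "photography_description",
    "cropped_to_plant",
]


def check_agcontext(ctx):
    """Return a list of problems found in an AgContext-style dictionary."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in ctx]
    fov = ctx.get("camera_fov")
    if fov is not None and not 1 <= fov <= 180:
        problems.append("camera_fov must be between 1 and 180 degrees")
    lat = ctx.get("location_lat")
    if lat is not None and not -90 <= lat <= 90:
        problems.append("location_lat must be in decimal degrees (-90 to 90)")
    return problems


# Placeholder values for illustration only
agcontext = {
    "crop_type": "wheat",
    "bbch_growth_range": {"min": 12, "max": 30},  # assumed representation
    "soil_colour": "brown",
    "surface_cover": "cereal",
    "surface_coverage": 30,                       # assumed: a single per cent value
    "weather_description": "Clear sky, low sun angle, long shadows",
    "location_lat": -30.0,
    "location_long": 150.0,
    "location_datum": 4326,                       # EPSG:4326 is WGS 84
    "camera_make": "FLIR Blackfly 23S6C",
    "camera_lens": "example lens model",
    "camera_lens_focallength": 8,
    "camera_height": 1000,                        # millimetres above ground
    "camera_angle": 90,                           # pointing straight down
    "camera_fov": 75,
    "photography_description": "Top-down images of wheat rows with weeds",
    "cropped_to_plant": False,
}

print(check_agcontext(agcontext))  # []
agcontext_json = json.dumps(agcontext, indent=2)
```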

Contributing to Weed-AI is straightforward and follows the five steps summarised below:

- Collect images.

- Annotate images in COCO or VOC format.

- Complete the AgContext file.

- Complete the metadata file.

- Upload via the Weed-AI uploading tool.
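The two annotation formats named in the steps above differ mainly in how bounding boxes are written: COCO stores each box as [x, y, width, height] measured from the top-left corner, usually in a single JSON file per dataset, while Pascal VOC stores corner coordinates (xmin, ymin, xmax, ymax) in one XML file per image. The sketch converts between the two box conventions and builds a minimal COCO-style dictionary. The file name, image size and category entries are hypothetical, VOC's original 1-based pixel convention is ignored, and Weed-AI may expect additional fields.

```python
def voc_to_coco(xmin, ymin, xmax, ymax):
    """VOC-style corner box -> COCO-style [x, y, width, height] (top-left origin)."""
    return [xmin, ymin, xmax - xmin, ymax - ymin]


def coco_to_voc(bbox):
    """COCO-style [x, y, width, height] -> VOC-style (xmin, ymin, xmax, ymax)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


# A minimal COCO-style annotation structure for one image and one weed (hypothetical values)
bbox = voc_to_coco(120, 80, 220, 200)
coco = {
    "images": [{"id": 1, "file_name": "wheat_0001.jpg", "width": 1920, "height": 1200}],
    "categories": [{"id": 1, "name": "Lolium rigidum", "supercategory": "weed"}],
    "annotations": [{
        "id": 1,
        "image_id": 1,
        "category_id": 1,
        "bbox": bbox,
        "area": bbox[2] * bbox[3],  # box area in pixels
        "iscrowd": 0,
    }],
}
print(bbox)  # [120, 80, 100, 120]
```

Mixing up the two conventions is a common source of silently shifted or stretched boxes, so checking that a round-trip conversion returns the original corners is a cheap sanity check before upload.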

Following successful upload, a review stage helps maintain the quality of the uploaded data. There are currently 12 datasets uploaded, covering image classification and bounding box annotations and totalling 18,367 images. Crops include wheat, chickpea, canola, lupins and cotton, with weeds such as annual ryegrass, turnip weed, wild radish, blue lupins and sowthistle.

Conclusion

The fundamental component of an effective weed recognition algorithm is the quality and quantity of the image data it is provided. Preliminary results for the recognition of annual ryegrass and turnip weed in wheat and chickpea highlight the difficulty of weed recognition in crop scenarios. Weed-AI is an important tool to address this data gap, providing an entirely open-source opportunity for the upload, searching and download of weed image data for the development of Australian-relevant weed recognition algorithms.

Acknowledgements

The research undertaken as part of this project is made possible by the significant contributions of growers through both trial cooperation and the support of the GRDC; the authors would like to thank them for their continued support.

References

Coleman GRY, Stead A, Rigter MP, Xu Z, Johnson D, Brooker GM, Sukkarieh S, Walsh MJ (2019) Using energy requirements to compare the suitability of alternative methods for broadcast and site-specific weed control. Weed Technology 33(4), 633-650.

Fernández-Quintanilla C, Peña JM, Andújar D, Dorado J, Ribeiro A, López-Granados F (2018) Is the current state of the art of weed monitoring suitable for site-specific weed management in arable crops? Weed Research 58(4), 259-272.

Keller M, Gutjahr C, Möhring J, Weis M, Sökefeld M, Gerhards R (2014) Estimating economic thresholds for site-specific weed control using manual weed counts and sensor technology: an example based on three winter wheat trials. Pest Management Science 70, 200-211.

Su D, Qiao Y, Kong H, Sukkarieh S (2021) Real time detection of inter-row ryegrass in wheat farms using deep learning. Biosystems Engineering 204, 198-211.

Timmermann C, Gerhards R, Kühbauch W (2003) The economic impact of site-specific weed control. Precision Agriculture 4(3), 249-260.

Walsh MJ, Newman P, Powles S (2013) Targeting weed seeds in-crop: a new weed control paradigm for global agriculture. Weed Technology 27(3), 431-436.

Wang A, Wei X, Zhang W (2019) A review on weed detection using ground-based machine vision and image processing techniques. Computers and Electronics in Agriculture 158, 226-240.

Contact details

Michael Walsh

Werombi Road, Brownlow Hill NSW 2570

0448 847 272

m.j.walsh@sydney.edu.au

GRDC Project Code: UOS1703-002RTX, UOS2002-003RTX
